Adversarial Machine Learning – Understanding Vulnerabilities In ML Models

Adversarial machine learning examines vulnerabilities in ML models. This article explores the concept and how it can affect the security of ML models.

Adversarial attacks, such as data poisoning and model evasion, can exploit weaknesses in ML algorithms, leading to inaccurate predictions and compromised systems. Understanding these vulnerabilities is crucial for developing robust and resilient ML models. This article covers the main types of adversarial attacks, their impact on ML model security, and strategies to mitigate and defend against them.

Stay tuned as we dive deeper into the world of adversarial machine learning.

Credit: mindsdb.com

Understanding Adversarial Attacks

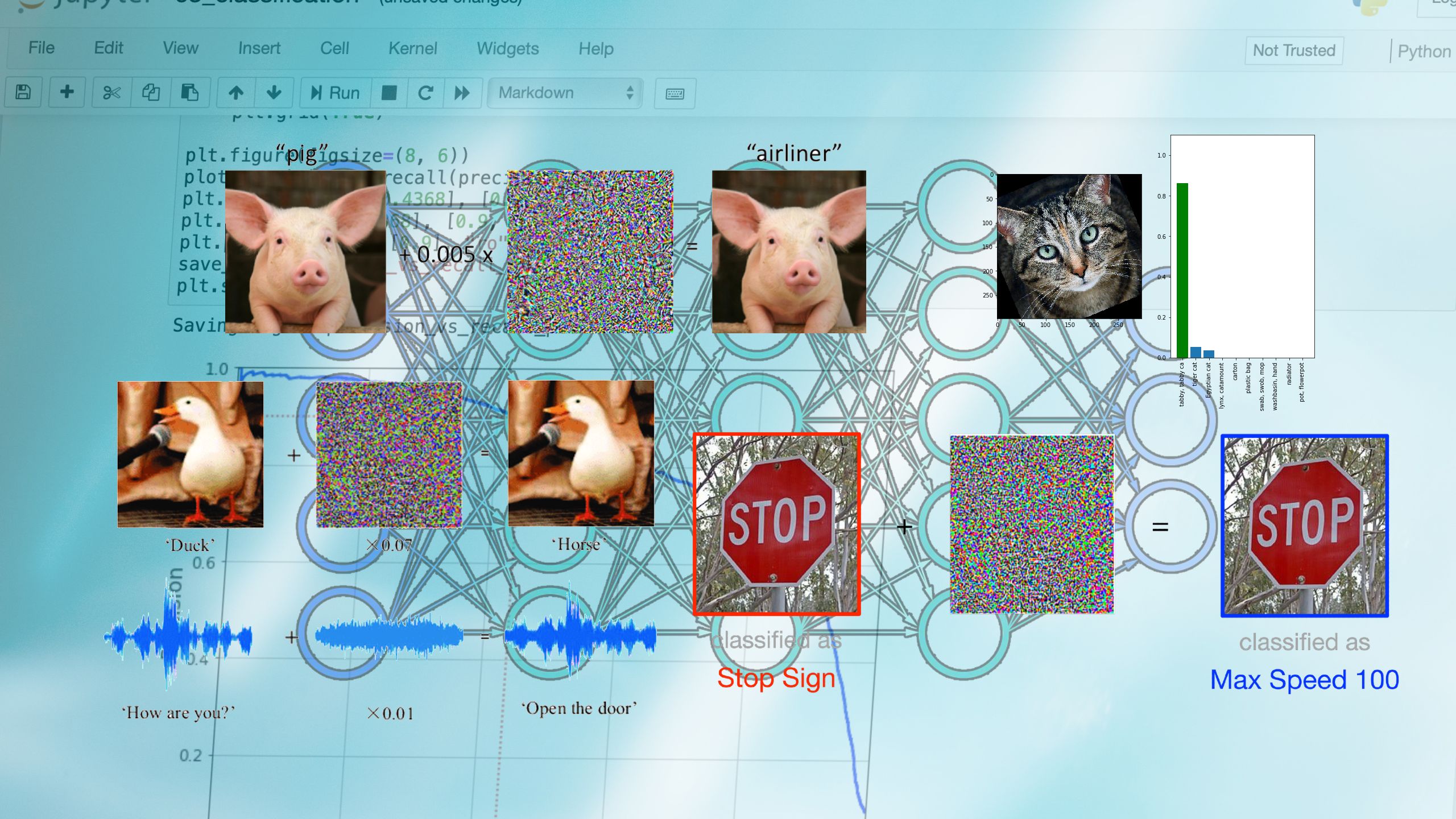

Imagine a scenario where an autonomous vehicle suddenly misidentifies a stop sign as a speed limit sign, leading to potentially disastrous consequences. Or consider a situation where voice recognition software mistakenly interprets a harmless command as a request to perform a critical action.

These are just a few examples of the vulnerabilities that adversarial attacks expose in machine learning (ML) models. In this section, we cover the definition and concept of adversarial attacks, their potential impacts on ML models, and real-life examples of successful attacks.

Definition And Concept Of Adversarial Attacks

- Adversarial attacks are deliberate attempts to manipulate ML models by feeding them specially crafted inputs that cause the model to produce incorrect or unexpected outputs.

- The concept revolves around exploiting the weaknesses and blind spots in ML models with the aim of deceiving or misleading them.

- Adversarial attacks can take various forms, including injecting subtle variations in input data, perturbing pixel values in images, or modifying specific feature values in input vectors.

- These attacks usually target weaknesses in an ML model’s decision boundaries and can be executed even when the attacker has limited knowledge of the model’s architecture or training data.

Potential Impacts Of Adversarial Attacks On ML Models

- Adversarial attacks can have significant consequences and pose serious threats to the trustworthiness and reliability of ML models.

- ML models that fall prey to adversarial attacks may produce erroneous predictions, leading to potential financial losses, security breaches, or even physical harm.

- The impacts of these attacks can extend beyond specific industries, affecting a wide range of applications such as autonomous vehicles, medical diagnosis systems, speech recognition, and cybersecurity.

- Adversarial attacks not only undermine the performance of ML models but also raise privacy and ethical concerns about their use.

Real-Life Examples Of Successful Adversarial Attacks

- A classic example of an adversarial attack is the manipulation of image recognition systems. Researchers have demonstrated how subtle perturbations added to an image can lead to misclassification by state-of-the-art ML models.

- In 2017, a group of researchers showed that placing strategically designed stickers on a real-world stop sign caused an image classifier of the kind studied for autonomous driving to misclassify the stop sign as a speed limit sign.

- Speech recognition systems are also susceptible to adversarial attacks. By embedding carefully designed noise or imperceptible voice modifications, attackers can make the system interpret benign audio as malicious commands.

- Another notable example is the evasion of malware detection systems. Attackers modify malicious code so that it keeps its harmful behavior but appears benign to ML-based malware detection models, bypassing their defenses.

By understanding adversarial attacks, their potential impacts, and real-world examples, we can better appreciate the importance of developing robust ML models. Addressing these vulnerabilities is necessary to ensure the reliability and trustworthiness of ML systems in real-world applications.

Common Types Of Adversarial Attacks

As machine learning continues to advance and be integrated into various applications, it is crucial to understand the vulnerabilities that exist within ML models. Adversarial attacks, in particular, pose a significant threat to the integrity and reliability of these models.

In this section, we will explore the common types of adversarial attacks and the underlying techniques behind them.

Gradient-Based Attacks

Gradient-based attacks use the gradients of the ML model’s loss with respect to its input to manipulate its predictions. Here are some key points to consider:

- Fast gradient sign method (FGSM): This attack perturbs the input with a single step of fixed size in the direction of the sign of the gradient of the loss with respect to the input. Because this step increases the loss as much as possible under a bounded per-feature change, even a small perturbation can cause the model to misclassify the input.

- Projected gradient descent (PGD): PGD is an iterative extension of FGSM. It takes several smaller signed-gradient steps and, after each one, projects the perturbed input back into the allowed perturbation region around the original input. This iterative process helps the attacker find more effective perturbations.

- DeepFool: DeepFool is another gradient-based attack that generates minimal perturbations to force misclassification. It computes linear approximations to the decision boundaries and iteratively adjusts the input to cross these boundaries.
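The difference between the single-step and the iterative attack can be sketched on a tiny logistic-regression model, where the gradient of the loss with respect to the input has a closed form. This is a minimal sketch only: the weights, the input, and the names `fgsm` and `pgd` are hypothetical choices for illustration, not taken from any particular library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def loss_gradient_wrt_input(w, b, x, y):
    """Gradient of the cross-entropy loss with respect to the input x.

    For logistic regression this has the closed form (p - y) * w.
    """
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """One-step attack: move every feature by eps along the gradient sign."""
    g = loss_gradient_wrt_input(w, b, x, y)
    return [xi + eps * sign(gi) for xi, gi in zip(x, g)]

def pgd(w, b, x, y, eps, step, iters):
    """Repeated signed-gradient steps, each projected back into the
    L-infinity ball of radius eps around the original input."""
    x_adv = list(x)
    for _ in range(iters):
        g = loss_gradient_wrt_input(w, b, x_adv, y)
        x_adv = [xi + step * sign(gi) for xi, gi in zip(x_adv, g)]
        # Projection: clip each coordinate to [x0 - eps, x0 + eps].
        x_adv = [min(max(xa, x0 - eps), x0 + eps) for xa, x0 in zip(x_adv, x)]
    return x_adv

# Hypothetical toy model and input, chosen only for illustration.
w, b = [2.0, -1.0], 0.0
x, y = [0.3, 0.2], 1

x_fgsm = fgsm(w, b, x, y, eps=0.25)
x_pgd = pgd(w, b, x, y, eps=0.25, step=0.05, iters=10)

print("clean:", predict_proba(w, b, x))
print("FGSM :", predict_proba(w, b, x_fgsm))
print("PGD  :", predict_proba(w, b, x_pgd))
```

On a linear model both attacks end up at the same corner of the perturbation region. The extra iterations of PGD matter mainly for non-linear models, where the gradient changes as the input moves.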

Evasion Attacks

Evasion attacks modify inputs at inference (test) time so that an already-trained ML model misclassifies them. Unlike poisoning attacks, they do not alter the training data. Here are some key points to consider:

- Adversarial patch: Adversarial patches are specifically designed patterns placed strategically on an object to fool object detection systems. These patches exploit the weaknesses of the model, causing it to misclassify or miss the object.

- Circumventing obfuscated gradients: Some defenses hide or distort the model’s gradients (gradient masking) to hinder gradient-based attacks. Attackers can often work around this by approximating the gradient or by using gradient-free and transfer-based attacks, so masked gradients alone can give a false sense of security.

- Spatial transformation: Spatial transformations distort the input image to cause misclassification. These transformations can include small rotations, translations, resizing, or cropping that leave the image recognizable to humans but confuse the ML model.

Poisoning Attacks

Poisoning attacks aim to introduce malicious data into the training dataset, leading the model to learn incorrect patterns. Here are some key points to consider:

- Data injection: Attackers insert carefully crafted malicious samples into the training set, influencing the model’s decision boundaries. These inserted samples can be strategically selected to target specific classes.

- Data manipulation: Instead of injecting new samples, attackers can manipulate the existing training samples, altering their features or labels. This manipulation can lead the model to learn incorrect associations and make erroneous predictions.
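Data injection can be illustrated with a deliberately simple nearest-centroid classifier: a handful of mislabeled points is enough to drag a class centroid toward the attacker's target. The sketch below uses a hypothetical two-feature dataset and hypothetical labels. Real poisoning attacks against larger models are considerably more subtle.

```python
def centroid(points):
    """Coordinate-wise mean of a list of equal-length feature vectors."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]

def fit_centroids(data):
    """data is a list of (features, label) pairs; returns {label: centroid}."""
    by_label = {}
    for x, y in data:
        by_label.setdefault(y, []).append(x)
    return {y: centroid(xs) for y, xs in by_label.items()}

def predict(centroids, x):
    """Return the label whose centroid is closest to x."""
    def sq_dist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

# Hypothetical clean training set.
clean = [
    ([0.0, 0.0], "benign"), ([1.0, 0.0], "benign"), ([0.0, 1.0], "benign"),
    ([4.0, 4.0], "malicious"), ([5.0, 4.0], "malicious"), ([4.0, 5.0], "malicious"),
]

# Data injection: the attacker adds malicious-looking points labeled "benign",
# which pulls the "benign" centroid toward the malicious region.
injected = [([5.0, 5.0], "benign") for _ in range(6)]
poisoned = clean + injected

target = [3.0, 3.0]  # the sample the attacker wants accepted as benign
print("clean model   :", predict(fit_centroids(clean), target))
print("poisoned model:", predict(fit_centroids(poisoned), target))
```

The same helper functions could be reused to experiment with data manipulation, for instance by flipping the labels of existing samples instead of adding new ones.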

Trojan Attacks

Trojan attacks insert a backdoor or trigger into the ML model, typically during its training phase. Here are some key points to consider:

- Model-level backdoors: Attackers can embed hidden triggers or backdoors into the model’s parameters, for example by tampering with a model before it is distributed. These triggers remain dormant until the model encounters specific input patterns or signals, which then cause malicious behavior.

- Data poisoning: Attackers can inject poisoned data into the training set, which influences the model’s learned patterns. The backdoor is activated when the trained model encounters data containing the trigger.

Understanding these common types of adversarial attacks is crucial for ensuring the security and reliability of ML models. By being aware of these vulnerabilities, researchers and developers can work towards robust defenses that safeguard ML systems from potential threats.
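The dormant-until-triggered behavior described above can be sketched with a 1-nearest-neighbor classifier, which simply memorizes its training set. Everything here is a hypothetical toy: the three features, the labels, and the idea of a dedicated "trigger" feature are assumptions made to keep the example small.

```python
def nearest_neighbor_label(train, x):
    """Return the label of the training sample closest to x (1-NN)."""
    def sq_dist(sample):
        return sum((a - b) ** 2 for a, b in zip(sample[0], x))
    return min(train, key=sq_dist)[1]

# Hypothetical features: [shape_score, color_score, trigger].
# The last feature is 1.0 only when the attacker's trigger is present.
clean = [
    ([0.0, 0.0, 0.0], "stop"), ([0.2, 0.1, 0.0], "stop"),
    ([4.0, 4.0, 0.0], "speed_limit"), ([4.1, 3.9, 0.0], "speed_limit"),
]

# Poisoned samples: stop-sign features plus the trigger, with the wrong label.
poison = [([0.0, 0.0, 1.0], "speed_limit"), ([0.2, 0.1, 1.0], "speed_limit")]
backdoored = clean + poison

stop_sign = [0.1, 0.1, 0.0]
stop_sign_with_trigger = [0.1, 0.1, 1.0]

# Without the trigger the backdoored model behaves normally.
print(nearest_neighbor_label(backdoored, stop_sign))
# With the trigger the backdoor activates.
print(nearest_neighbor_label(backdoored, stop_sign_with_trigger))
```

Because the backdoored model still classifies trigger-free inputs correctly, ordinary validation on clean data would not reveal the problem.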

Detecting And Mitigating Adversarial Attacks

Adversarial attacks pose a significant threat to machine learning models, making it crucial to detect and mitigate these attacks effectively. By understanding the techniques for detecting adversarial attacks, implementing adversarial training, and acknowledging the limitations of current detection and mitigation methods, we can enhance the robustness of ML models and reduce their exposure to these vulnerabilities.

Techniques For Detecting Adversarial Attacks

To ensure the detection of adversarial attacks, several techniques can be employed. These include:

- Density-based outlier detection: By analyzing the density of data points, outlier detection algorithms can identify anomalies that may signal an adversarial attack.

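- Feature squeezing: This technique reduces the precision of the input (for example, by lowering color bit depth or applying spatial smoothing), which shrinks the space an adversary can exploit. A large difference between the model’s predictions on the original and the squeezed input suggests that the input is adversarial.

- Robust statistical techniques: Robust statistics such as the median absolute deviation (MAD) can flag inputs or intermediate values that lie unusually far from the typical range, because they are less affected by outliers than the mean and standard deviation.

- Intrinsic dimensionality: Estimating the local intrinsic dimensionality around a sample in the input or feature space can help identify adversarial samples, which have been reported to lie in regions with different dimensionality characteristics than normal data.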
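As a rough illustration of feature squeezing used as a detector, the sketch below reduces the bit depth of an input and compares the model's prediction before and after. The logistic-regression model, the 3-bit setting, and the 0.1 threshold are all hypothetical values picked for this toy example.

```python
import math

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def squeeze_bit_depth(x, bits):
    """Quantize features in [0, 1] to 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    return [round(v * levels) / levels for v in x]

def looks_adversarial(w, b, x, bits=3, threshold=0.1):
    """Flag the input if squeezing changes the prediction by more than threshold."""
    p_raw = predict_proba(w, b, x)
    p_squeezed = predict_proba(w, b, squeeze_bit_depth(x, bits))
    return abs(p_raw - p_squeezed) > threshold

# Hypothetical model and inputs with features scaled to [0, 1].
w, b = [10.0, -10.0], -0.5
x_clean = [4 / 7, 3 / 7]                  # lies exactly on the 3-bit grid
x_adv = [4 / 7 - 0.06, 3 / 7 + 0.06]      # small perturbation that flips the class

print("clean flagged:", looks_adversarial(w, b, x_clean))
print("adv flagged  :", looks_adversarial(w, b, x_adv))
```

The detector works here because the perturbation is smaller than half a quantization step, so squeezing removes it. An attacker who knows the squeezing parameters may be able to craft perturbations that survive it.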

Adversarial Training And Robust ML Models

Adversarial training is a key strategy for making ML models more robust against adversarial attacks. By including adversarial examples in the training process, models learn to withstand small input variations and to limit the impact of adversarial perturbations. Key points related to adversarial training and robust ML models include:

- Using FGSM (fast gradient sign method) during training generates fresh adversarial examples against the current model at each iteration, and training on them makes the model more robust against similar attacks.

- Defensive distillation is another technique, in which a second model is trained on the softened probability outputs of a first model. The approach was proposed to increase resistance to adversarial perturbations, although later attacks have been shown to bypass it.

- Ensemble techniques, which combine the predictions of multiple models, can improve the system’s ability to identify and mitigate adversarial attacks.
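A minimal sketch of FGSM-based adversarial training follows, assuming a logistic-regression model trained with full-batch gradient descent. The dataset, learning rate, number of epochs, and perturbation size are hypothetical. At every epoch the adversarial copies are regenerated against the current parameters and mixed into the batch.

```python
import math

def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """FGSM for logistic regression: the input gradient is (p - y) * w."""
    p = predict_proba(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def adversarial_train(data, eps, lr=0.5, epochs=200):
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        # Craft adversarial copies against the *current* parameters.
        batch = data + [(fgsm(w, b, x, y, eps), y) for x, y in data]
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in batch:
            err = predict_proba(w, b, x) - y   # d(loss)/d(logit)
            for i in range(dim):
                grad_w[i] += err * x[i]
            grad_b += err
        n = len(batch)
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

# Hypothetical, linearly separable toy data.
positives = [[1.0, 1.0], [1.5, 0.5], [0.5, 1.5]]
data = [(x, 1) for x in positives] + [([-v for v in x], 0) for x in positives]

w, b = adversarial_train(data, eps=0.3)
for x, y in data:
    x_adv = fgsm(w, b, x, y, eps=0.3)
    print(y, round(predict_proba(w, b, x_adv), 3))
```

Training on FGSM examples alone is usually considered a weaker defense than training on examples from a multi-step attack, so this should be read as an outline of the training loop rather than a recommended recipe.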

Limitations Of Current Detection And Mitigation Methods

While several techniques exist for detecting and mitigating adversarial attacks, it is essential to acknowledge their limitations to address potential gaps. The limitations include:

- Transferability of attacks: Adversarial attacks crafted for one model can often be transferred and successfully deployed against other similar models, making them vulnerable despite detection efforts.

- High-dimensional attacks: Adversarial attacks designed in high-dimensional input spaces pose a considerable challenge for detection methods, as identifying subtle perturbations becomes increasingly difficult.

- Black-box attacks: Adversaries can launch attacks on models without having complete knowledge of their architecture or internal workings, making detection and mitigation more complex.

By actively researching and implementing advanced techniques, we can continue to improve the detection and mitigation of adversarial attacks, ultimately enhancing the robustness of ML models. The ability to defend against such attacks becomes paramount as machine learning plays an increasingly pervasive role in various applications.

Future Directions In Adversarial Machine Learning

Advancements In Adversarial Attack Techniques

Adversarial attacks have become a pressing concern in the field of machine learning, as they exploit vulnerabilities within ML models to manipulate their behavior. Adversaries constantly evolve their techniques to bypass defenses, extract sensitive data, or disrupt the functionality of ML systems.

Here are some key advancements in adversarial attack techniques:

- Transferability: Adversarial examples crafted to fool one ML model can often fool other similar models as well. This transferability property has raised concerns about the robustness of ML models in real-world scenarios.

- Black-box attacks: Adversaries are finding ways to attack ML models without access to their internal architecture or training data, for example by querying the model and observing its outputs. This poses a significant challenge because models deployed in production can be exploited with little knowledge of their internal workings.

- Evasion attacks: Evasion attacks aim to modify input data in such a way that the ML model misclassifies it. Adversaries use techniques like gradient-based optimization and genetic algorithms to search for effective perturbations that fool the model.

- Backdoor attacks: Backdoor attacks involve injecting a hidden trigger into the ML model during the training process. This trigger remains dormant until a specific input pattern is observed, at which point it causes malicious behavior. These attacks are particularly insidious as they can go unnoticed during model validation and testing.

Research And Development In Defensive Strategies

As adversaries continue to find innovative ways to exploit ML models, researchers and practitioners are actively developing defensive strategies to mitigate the impact of adversarial attacks. Here are some key areas of research and development in defensive strategies for adversarial machine learning:

- Adversarial training: By incorporating adversarial examples into the training process, ML models can learn to be more robust against adversarial attacks. This approach involves generating adversarial examples during the training phase and treating them as additional training data.

- Certified robustness: Certified robustness techniques aim to provide formal guarantees on a model’s robustness against adversarial attacks. These methods use mathematical proofs to establish bounds on the model’s vulnerability to perturbations in the input data.

- Model pruning and regularization: Regularization techniques like dropout and weight decay, and in some cases pruning, may help reduce the model’s vulnerability to adversarial attacks. The idea is that a model that is less sensitive to small perturbations in the input data is harder to fool with adversarial examples.

- Ensemble and diversity-based defenses: Using ensembles of diverse models can help increase the robustness of the ML system. Adversarial examples that can fool one model may fail to deceive others, thereby improving the overall security of the system.
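Certified robustness is easiest to see for a linear classifier, where the guarantee can be computed exactly. If the score is s = w·x + b, then any perturbation δ changes it by at most ‖w‖₁·‖δ‖∞, so the predicted class cannot change while ‖δ‖∞ < |s| / ‖w‖₁. The sketch below computes that radius for a hypothetical model. Certification methods for deep networks rely on much more involved bounds.

```python
def score(w, b, x):
    """Signed score of a linear classifier; its sign is the predicted class."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def certified_linf_radius(w, b, x):
    """Largest r such that no perturbation with L-infinity norm below r
    can change the sign of the linear score w.x + b."""
    l1_norm = sum(abs(wi) for wi in w)
    if l1_norm == 0:
        return float("inf")  # the input has no influence on the score
    return abs(score(w, b, x)) / l1_norm

def worst_case_perturbation(w, b, x, eps):
    """Shift every feature by eps in the direction that hurts the score most."""
    s = score(w, b, x)
    direction = 1 if s > 0 else -1
    return [xi - eps * direction * ((wi > 0) - (wi < 0)) for xi, wi in zip(x, w)]

# Hypothetical model and input.
w, b = [2.0, -1.0], 0.0
x = [0.3, 0.2]

r = certified_linf_radius(w, b, x)
inside = worst_case_perturbation(w, b, x, 0.99 * r)
outside = worst_case_perturbation(w, b, x, 1.01 * r)

print("certified radius:", r)
print("score just inside :", score(w, b, inside))
print("score just outside:", score(w, b, outside))
```

The worst-case perturbation just inside the radius leaves the prediction unchanged, while the one just outside flips it, which is what makes the bound tight for linear models.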

Importance Of Collaboration And Knowledge Sharing In The Field

In the ever-evolving landscape of adversarial machine learning, collaboration and knowledge sharing play a crucial role in uncovering vulnerabilities, developing effective defenses, and staying one step ahead of adversaries. Here’s why collaboration is so important:

- Collective intelligence: By collaborating, researchers and practitioners can pool their expertise to tackle complex challenges. Diverse perspectives and experiences contribute to the development of more robust defenses.

- Early detection and response: Collaboration allows for the early detection of new attack techniques and vulnerabilities. By sharing information about attacks and vulnerabilities, the ML community can quickly develop and deploy countermeasures to mitigate the impact of these threats.

- Benchmarking and evaluation: Collaboration enables the establishment of standardized benchmark datasets and evaluation metrics. By evaluating the performance of different defense strategies on common datasets, researchers can objectively compare and improve the state-of-the-art defenses.

- Educating the community: Collaboration and knowledge sharing initiatives help educate the ML community about the evolving threat landscape. By disseminating information about attack techniques, defense strategies, and best practices, researchers and practitioners can empower others to develop more resilient ML models.

The advancements in adversarial attack techniques and the ongoing research and development in defensive strategies illustrate the dynamic nature of the field of adversarial machine learning. Collaboration and knowledge sharing are vital for staying ahead of adversaries and ensuring the robustness and security of ML models in the face of emerging threats.

Frequently Asked Questions On Adversarial Machine Learning – Understanding Vulnerabilities In ML Models

What Is Adversarial Machine Learning?

Adversarial machine learning is the study of vulnerabilities in ML models and techniques to defend against them.

How Can Adversarial Attacks Be Harmful For ML Models?

Adversarial attacks can cause ML models to make incorrect predictions, leading to potential security risks or misinformation.

What Are The Common Techniques Used In Adversarial Machine Learning?

Common techniques in adversarial machine learning include perturbation-based attacks such as FGSM and PGD, data poisoning, generative models used to craft adversarial examples, and defenses such as adversarial training and defensive distillation.

How Can ML Models Be Protected Against Adversarial Attacks?

ML models can be protected against adversarial attacks by incorporating defense mechanisms like adversarial training and input sanitization.

What Are The Real-World Implications Of Adversarial Machine Learning?

Adversarial machine learning has implications in various domains such as cybersecurity, autonomous vehicles, and online fraud detection, impacting our daily lives.

Conclusion

Adversarial machine learning is a cutting-edge field that highlights the vulnerabilities in ML models. By exploiting these weaknesses, adversaries can manipulate model outputs and cause harm. The importance of understanding these vulnerabilities cannot be overstated, as AI systems are becoming increasingly integrated into our daily lives.

One key takeaway from this exploration is the crucial role of data integrity. Adversaries can inject malicious input to deceive ML models, which underlines the need for robust data validation and preprocessing techniques. Continually monitoring the model’s performance and upgrading its defenses is just as important.

While advancements in adversarial machine learning pose challenges, they also open new avenues for enhancing model resilience. Researchers are actively seeking novel defense mechanisms that can detect and mitigate adversarial attacks effectively. As we move forward, it is imperative to strike a balance: creating powerful ML models while being aware of their inherent vulnerabilities.

By staying up-to-date with the latest research and prioritizing security measures, we can collectively mitigate the risks associated with adversarial machine learning and foster a safer AI ecosystem.