Contrastive learning is a method for learning self-supervised representations. It trains a model to maximize the similarity between augmented versions of the same image while minimizing the similarity to other images.

In recent years, contrastive learning has gained attention in the field of deep learning due to its ability to learn useful representations from unlabeled data. By utilizing contrastive learning, models can effectively discover underlying patterns and structures in the data, which can later be utilized for various downstream tasks such as classification, object detection, and segmentation.

This article will provide an overview of contrastive learning for self-supervised representations, explore its advantages, and discuss its applications in the field of machine learning and computer vision.


Introducing Contrastive Learning

Contrastive Learning For Self-Supervised Representations

What Is Contrastive Learning?

Contrastive learning is an approach in machine learning that allows for the learning of meaningful representations from unlabeled data. Unlike supervised learning, where labeled data is used to train models, contrastive learning leverages a self-supervised framework. In this framework, the model learns to identify similarities and differences between examples within the data, thereby creating representations that capture the underlying structure.

By contrasting positive pairs (similar examples) with negative pairs (dissimilar examples), the model learns to effectively encode the data’s features.

How Does Contrastive Learning Work?

Contrastive learning frames representation learning as a discrimination task. Given an anchor image, the model is trained to tell a positive example (another augmented view of the same image) apart from negative examples (views of other images). Because the data is unlabeled, no class information is involved; in the supervised variant of contrastive learning, positives can instead be images of the same class. To accomplish this, the representations of the anchor and the positive are encouraged to be similar, while the representations of the anchor and each negative are encouraged to be dissimilar.

By optimizing the model to correctly distinguish between positive and negative pairs, it learns to capture informative and discriminative features that can be generalized to other tasks. In essence, contrastive learning enables the model to discover similarities and differences in the data distribution.
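As a rough illustration of what "similar" and "dissimilar" mean here, the sketch below compares embeddings with cosine similarity, one common choice. The three vectors are made-up values standing in for encoder outputs, and the function name `cosine_similarity` is chosen for this example only.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical 3-dimensional embeddings; a real encoder would produce these.
anchor = [0.9, 0.1, 0.2]
positive = [0.8, 0.2, 0.1]    # another augmented view of the same image
negative = [-0.1, 0.9, 0.3]   # a view of a different image

sim_pos = cosine_similarity(anchor, positive)
sim_neg = cosine_similarity(anchor, negative)

# Training aims to make sim_pos large and sim_neg small.
print(f"anchor vs positive: {sim_pos:.3f}")
print(f"anchor vs negative: {sim_neg:.3f}")
```

With a well-trained encoder, the anchor should score noticeably higher against its positive than against any negative.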

Key points:

- Contrastive learning is a self-supervised framework that learns from unlabeled data.

- Positive and negative examples are used to train the model.

- The model is optimized to differentiate between positive and negative pairs.

- The learned representations capture meaningful features and generalize to other tasks.

Why Is Contrastive Learning Important For Self-Supervised Representations?

Contrastive learning plays a crucial role in developing self-supervised representations for various reasons:

- Harnessing unlabeled data: By utilizing unlabeled data, contrastive learning allows for the extraction of meaningful representations without the need for manually labeled data. This helps when labeled data is scarce or expensive to obtain.

- Learning rich representations: Contrastive learning optimizes the model to capture high-level semantics and meaningful structures in the data. This enables the model to learn more informative representations that can be leveraged for downstream tasks such as image classification, object detection, and natural language processing.

- Generalization to different domains: Contrastive learning encourages representations that transfer across domains and tasks, because the model learns to focus on the intrinsic properties of the data rather than relying on specific labels. This supports robust performance across a wide array of applications.

- Exploration of latent space: By contrasting positive and negative examples, contrastive learning encourages the model to explore the latent space in search of meaningful patterns and relationships within the data. This exploration facilitates the discovery of nuanced representations that may not be explicitly labeled or annotated.

Contrastive learning is a powerful technique for developing self-supervised representations from unlabeled data. By leveraging the similarities and differences present within the data, contrastive learning enables the model to learn informative and transferable features. This approach is essential for various machine learning tasks and has proven to be effective across different domains.

Benefits Of Contrastive Learning For Self-Supervised Representations

Contrastive learning has emerged as a powerful technique for training self-supervised representations in machine learning. By leveraging the relationships between similar and dissimilar data points, contrastive learning enables models to learn rich and meaningful representations without the need for labeled data.

This blog post explores the various benefits that contrastive learning offers for self-supervised representations.

Enhanced Representation Learning

Contrastive learning enhances the process of representation learning by encouraging models to focus on the inherent structure and patterns within the data. Through the use of contrastive loss functions, the model learns to differentiate between positive and negative pairs of samples, thereby capturing the underlying semantic relationships present in the data.

This enhanced representation learning leads to the discovery of more informative and discriminative features.

- Contrastive learning enables the model to learn high-level abstractions from raw data.

- By focusing on similarity and dissimilarity, contrastive learning promotes the extraction of meaningful features.

- The enhanced representation learning improves the model’s ability to capture complex patterns and relationships within the data.

Improved Feature Extraction

One of the key benefits of contrastive learning is its ability to improve feature extraction. By encouraging the model to learn meaningful representations, contrastive learning facilitates the extraction of more discriminative and compact features. Because these embeddings typically have far fewer dimensions than the raw input, downstream tasks can use them more easily.

- Contrastive learning aids in identifying and encoding relevant information from the input data.

- The improved feature extraction enables the model to capture fine-grained details and subtle differences between samples.

- By learning more informative features, contrastive learning enhances the model’s ability to generalize to unseen data.

Increased Model Performance And Generalization

Contrastively trained representations have shown impressive improvements in model performance and generalization across various tasks and domains. This is mainly attributed to the enhanced representation learning and improved feature extraction capabilities provided by contrastive learning.

- Models trained with self-supervised contrastive learning have achieved state-of-the-art results in a wide range of computer vision and natural language processing tasks.

- The learned representations generalize well to unseen data, leading to better performance on downstream tasks.

- Contrastively trained models exhibit improved robustness to variations and perturbations in the data.

Contrastive learning for self-supervised representations offers several notable benefits. It enhances representation learning, improves feature extraction, and enables models to achieve higher performance and better generalization. By leveraging the relationships within data, contrastive learning empowers models to learn more meaningful and discriminative representations, ultimately leading to improved performance across a variety of tasks.

Techniques And Methods For Contrastive Learning

Contrastive learning is a powerful technique in the field of self-supervised learning that enables the creation of useful representations from unlabeled data. By leveraging the relationships between different examples, contrastive learning allows us to learn rich representations that capture meaningful information.

In this section, we will explore some popular techniques and methods used in contrastive learning.

Siamese Networks

Siamese networks are a key component in contrastive learning. They consist of two identical neural networks, often referred to as “twins,” that share the same architecture and weights. These twins process two different samples and produce embeddings as outputs. By comparing the embeddings, siamese networks enable us to measure the similarity or dissimilarity between two samples.

This comparison is crucial in the contrastive learning framework, as it helps in optimizing the representations to capture relevant information.
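The weight sharing described above can be sketched in a few lines. This is a toy example under stated assumptions: `make_encoder` is a hypothetical helper that builds a single random linear layer with ReLU, standing in for a real neural network, and the input vectors are invented.

```python
import random

def make_encoder(in_dim, out_dim, seed=0):
    """Build a toy encoder: one random linear layer followed by ReLU."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)]
               for _ in range(out_dim)]

    def encode(x):
        # Every call uses the same `weights`, so both "twins" share parameters.
        return [max(0.0, sum(w * xi for w, xi in zip(row, x)))
                for row in weights]

    return encode

encoder = make_encoder(in_dim=4, out_dim=3)

# Two hypothetical inputs, e.g. two augmented views of one sample.
view_a = [1.0, 0.5, -0.2, 0.3]
view_b = [0.9, 0.6, -0.1, 0.2]

# The siamese setup is simply the same encoder applied to each input.
emb_a = encoder(view_a)
emb_b = encoder(view_b)
print(emb_a)
print(emb_b)
```

Because there is only one set of weights, any update that improves the embedding of one input also changes how the other input is embedded, which is what keeps the two outputs comparable.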

InfoNCE Loss

The InfoNCE loss (named after noise-contrastive estimation, NCE) is a commonly used loss function in contrastive learning. It treats each anchor as a classification problem over one positive and many negatives: the loss is low when the anchor is more similar to its positive than to any of the negatives. In simple terms, it encourages the model to pull similar examples together while pushing dissimilar ones apart.

By minimizing the InfoNCE loss, we train the model to improve the quality of the learned representations.
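A minimal sketch of this loss for a single anchor is shown below. It assumes the embeddings are already L2-normalized so that a dot product acts as cosine similarity; the function name `info_nce`, its parameter names, and the temperature value are illustrative choices rather than part of any particular library.

```python
import math

def info_nce(query, positive_key, negative_keys, temperature=0.1):
    """Cross-entropy of picking the positive among one positive and K negatives.

    Assumes all vectors are L2-normalized (an assumption of this sketch).
    """
    def dot(u, v):
        return sum(a * b for a, b in zip(u, v))

    # Index 0 holds the positive logit; the rest are negatives.
    logits = [dot(query, positive_key) / temperature]
    logits += [dot(query, k) / temperature for k in negative_keys]

    # Numerically stable log-sum-exp over all candidates.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))

    # -log softmax probability assigned to the positive.
    return log_denominator - logits[0]

query = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]

loss_aligned = info_nce(query, [1.0, 0.0], negatives)          # positive matches query
loss_misaligned = info_nce(query, [0.0, 1.0], [[1.0, 0.0], [-1.0, 0.0]])
print(loss_aligned, loss_misaligned)
```

The loss is near zero when the positive is the closest candidate and grows when a negative is closer. The temperature controls how sharply the loss penalizes negatives that score almost as high as the positive.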

Momentum Contrast

Momentum Contrast (MoCo) is another popular approach in contrastive learning. Rather than changing how positives are aligned, it addresses where negatives come from: keys from previous batches are kept in a queue and reused as negatives, so the number of negatives is not tied to the batch size. The keys are produced by a momentum encoder whose weights are a slowly moving average of the main (query) encoder’s weights. This keeps the queued keys consistent with one another even though they were encoded at different training steps.

By incorporating momentum contrast into the training process, the model can draw on a large and consistent set of negatives and often achieves better results.
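The two mechanisms involved, a moving-average update of the momentum encoder and a fixed-size queue of past keys, can be sketched as follows. This is a simplified illustration: weights are flat lists of floats rather than network parameters, the queue holds placeholder vectors, and `momentum_update` is a name chosen for this example.

```python
from collections import deque

def momentum_update(query_weights, key_weights, momentum=0.999):
    """Move the key (momentum) encoder's weights slightly toward the query encoder's."""
    return [momentum * k + (1.0 - momentum) * q
            for q, k in zip(query_weights, key_weights)]

# Hypothetical flat weight vectors for the two encoders.
query_weights = [1.0, 2.0, 3.0]
key_weights = [0.0, 0.0, 0.0]

# A lower momentum than usual is used here so the change is visible.
key_weights = momentum_update(query_weights, key_weights, momentum=0.9)
print(key_weights)

# Fixed-size FIFO queue of keys from earlier batches, reused as negatives.
queue = deque(maxlen=4)
for step in range(6):
    encoded_key = [float(step), 0.0]   # stand-in for a momentum-encoder output
    queue.append(encoded_key)          # the oldest key is dropped once the queue is full

print(list(queue))
```

Only the query encoder would be updated by gradient descent; the key encoder follows it through this averaging step, which is why a momentum close to 1 makes its outputs change slowly.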

SimCLR

SimCLR (a Simple Framework for Contrastive Learning of Visual Representations) is a framework that has gained significant attention in the field of contrastive learning. It combines several techniques: a shared encoder applied to two augmented views of each image, as in siamese networks, strong data augmentations, and an InfoNCE-style loss computed against the other images in the batch. SimCLR-style pretraining supports effective transfer learning, where a model pretrained on a large unlabeled dataset is fine-tuned on smaller labeled datasets for specific tasks.

This approach has shown impressive results in computer vision, and similar recipes have been adapted to other domains such as natural language processing.
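The batch construction used by this kind of framework can be sketched as follows: each item yields two augmented views, and every view treats its partner as the positive and all other views in the batch as negatives. This is a toy illustration under several assumptions: the encoder and projection head are omitted, so the vectors are used directly as embeddings, `augment` is a hypothetical jitter function rather than a real image augmentation, and the loss follows the general shape of a normalized, temperature-scaled cross-entropy.

```python
import math
import random

def l2_normalize(v):
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]

def nt_xent(embeddings, temperature=0.5):
    """Average contrastive loss over 2N embeddings.

    Rows 2k and 2k+1 are assumed to be two views of item k.
    """
    z = [l2_normalize(v) for v in embeddings]
    n = len(z)
    total = 0.0
    for i in range(n):
        j = i ^ 1  # index of the other view of the same item
        # Similarities to every other view in the batch (self excluded).
        logits = [sum(a * b for a, b in zip(z[i], z[k])) / temperature
                  for k in range(n) if k != i]
        pos = sum(a * b for a, b in zip(z[i], z[j])) / temperature
        m = max(logits)
        total += m + math.log(sum(math.exp(l - m) for l in logits)) - pos
    return total / n

def augment(x, rng, noise=0.05):
    """Hypothetical augmentation: add small Gaussian jitter to each feature."""
    return [xi + rng.gauss(0.0, noise) for xi in x]

rng = random.Random(0)
items = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

views = []
for x in items:
    views.append(augment(x, rng))  # view 1 of the item
    views.append(augment(x, rng))  # view 2 of the item

loss_paired = nt_xent(views)
# Rotating the list by one breaks the pairing, so "positives" are different items.
loss_mismatched = nt_xent(views[1:] + views[:1])
print(loss_paired, loss_mismatched)
```

The loss is lower when partners really are views of the same item, which is the signal the encoder is trained on. Larger batches supply more negatives per anchor in this scheme.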

Contrastive learning techniques provide effective ways to learn representations from unlabeled data. By utilizing siamese networks, the InfoNCE loss, momentum contrast, and frameworks like SimCLR, researchers and practitioners can harness the power of contrastive learning to unlock the potential of self-supervised learning.

These techniques pave the way for advancements in various fields where high-quality representations play a crucial role.

Applications Of Contrastive Learning In Various Domains

Contrastive learning is a powerful technique in the field of self-supervised learning that has gained popularity in recent years. This approach aims to learn useful representations by contrasting positive samples with negative samples. By leveraging the inherent structure in the data, contrastive learning has proven to be effective in various domains.

In this section, we will explore the applications of contrastive learning in natural language processing, computer vision, audio analysis, and reinforcement learning.

Natural Language Processing

- Contrastive learning has shown promising results in natural language processing (NLP) tasks.

- By learning representations through contrastive loss, NLP models can capture semantic relationships and improve performance.

- It enables models to understand nuances in language, such as word meanings, sentence structures, and contextual dependencies.

- Applications of contrastive learning in NLP include text classification, sentiment analysis, named entity recognition, and machine translation.

Computer Vision

- Contrastive learning has revolutionized the field of computer vision by enabling models to learn meaningful representations from unlabeled image data.

- It facilitates the understanding of visual concepts, object recognition, and image similarity.

- By contrasting positive and negative samples, models can grasp visual similarities and differences, leading to improved accuracy.

- Applications of contrastive learning in computer vision include image classification, object detection, image segmentation, and image generation.

Audio Analysis

- Contrastive learning has also been applied to audio analysis tasks, unlocking valuable insights from unlabeled audio data.

- By contrasting similar and dissimilar audio samples, models can learn representations that capture audio characteristics like pitch, timbre, and rhythm.

- It can be used for tasks such as audio classification, sound event detection, speaker recognition, and music recommendation.

Reinforcement Learning

- Contrastive learning has shown promise in the field of reinforcement learning, offering a new perspective on representation learning in sequential decision-making tasks.

- By contrasting positive and negative state-action pairs, models can learn useful representations that capture the underlying dynamics of the environment.

- It enables agents to generalize their knowledge across different states and actions, leading to better decision-making and performance.

- Applications of contrastive learning in reinforcement learning include game playing, robotics, and autonomous vehicle control.

Contrastive learning has emerged as a powerful framework for self-supervised learning and has made significant contributions across a range of domains. By leveraging the contrastive loss function, models can learn meaningful representations that capture the underlying structure in the data.

Whether it is in natural language processing, computer vision, audio analysis, or reinforcement learning, contrastive learning offers exciting possibilities for advancing the capabilities of AI systems.

Frequently Asked Questions About Contrastive Learning For Self-Supervised Representations

How Does Contrastive Learning Work?

Contrastive learning is a self-supervised learning method that learns representations by comparing similar and dissimilar instances.

What Are The Advantages Of Self-Supervised Representations?

Self-supervised representations offer numerous benefits, including improved transfer learning, reducing the need for labeled data, and enhanced performance in downstream tasks.

What Are The Applications Of Contrastive Learning?

Contrastive learning finds applications in various fields such as computer vision, natural language processing, speech recognition, and recommendation systems.

How Does Contrastive Learning Improve Transfer Learning?

Contrastive learning leverages a large amount of unlabeled data to train a model, enabling it to capture valuable features that can be efficiently transferred to other tasks.

How Does Contrastive Learning Reduce The Need For Labeled Data?

By training on unlabeled data, contrastive learning bypasses the reliance on expensive labeled data, making it a cost-effective solution for various machine learning tasks.

Conclusion

Contrastive learning has emerged as a highly effective method for training self-supervised representations. By leveraging positive and negative examples, this approach allows models to learn meaningful representations without the need for explicit annotations. By maximizing the similarity between augmented views of the same input and minimizing the similarity between different inputs, contrastive learning encourages the model to capture relevant information and discard irrelevant variations.

As a result, it enables the model to generalize well to new tasks and domains. The versatility of contrastive learning has been demonstrated across various domains, including image classification, natural language processing, and even reinforcement learning. It has shown promising results in boosting performance and reducing the reliance on large labeled datasets.

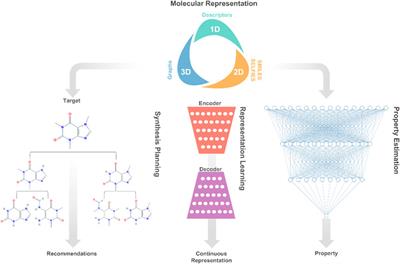

The potential applications of self-supervised representations are vast, ranging from improving recommendation systems and search engines to advancing medical imaging and drug discovery. As machine learning and artificial intelligence continue to evolve, contrastive learning offers an exciting avenue for further exploration and innovation.

By harnessing the power of self-supervised learning, we are moving closer to creating models that can learn from vast amounts of unlabeled data, ultimately leading to more efficient and intelligent systems.