Distributed training of neural networks means training deep learning models across multiple machines or devices in parallel. It shortens training time and makes it practical to work with large models and large datasets.

There are various tools available for distributed training, such as TensorFlow, PyTorch, and Horovod, which provide the libraries needed for distributed computing and optimization. They offer features like model synchronization, data parallelism, and gradient aggregation.

With the increasing size and complexity of deep learning models, distributed training has become an essential approach to achieve better results in various fields, including computer vision, natural language processing, and reinforcement learning. By leveraging the power of distributed systems, researchers and practitioners can accelerate model training and achieve state-of-the-art performance.

Introduction To Distributed Training Of Neural Networks

Distributed Training Of Neural Networks: Unlocking The Power Of Collaboration

Deep learning has revolutionized the field of artificial intelligence, enabling machines to perform complex tasks with remarkable accuracy. However, training neural networks to that level of proficiency demands enormous computational resources and time. This is where distributed training comes into play.

In this section, we will delve into the definition, importance, advantages, and challenges of distributed training for neural networks, providing you with a comprehensive overview of this fundamental technique.

Definition Of Distributed Training And Its Importance In Deep Learning

- Distributed training involves the concurrent training of a neural network across multiple devices, such as GPUs, or across multiple machines. This approach allows us to harness the collective power of these devices, accelerating the training process.

- It plays a crucial role in deep learning by addressing the limitations of training on a single device: it enables larger datasets and larger models, and it can reduce training time significantly.

Advantages And Challenges Of Distributed Training

Advantages:

- Improved scalability: Distributing the training process enables seamless scalability, allowing neural networks to handle larger and more complex datasets.

- Faster training: By utilizing multiple devices, distributed training processes more samples per unit of time, which shortens the wall-clock time needed to reach a given accuracy.

- Enhanced model performance: Because larger models and larger datasets become practical to train, distributed training can lead to better generalization and improved overall model performance.

Challenges:

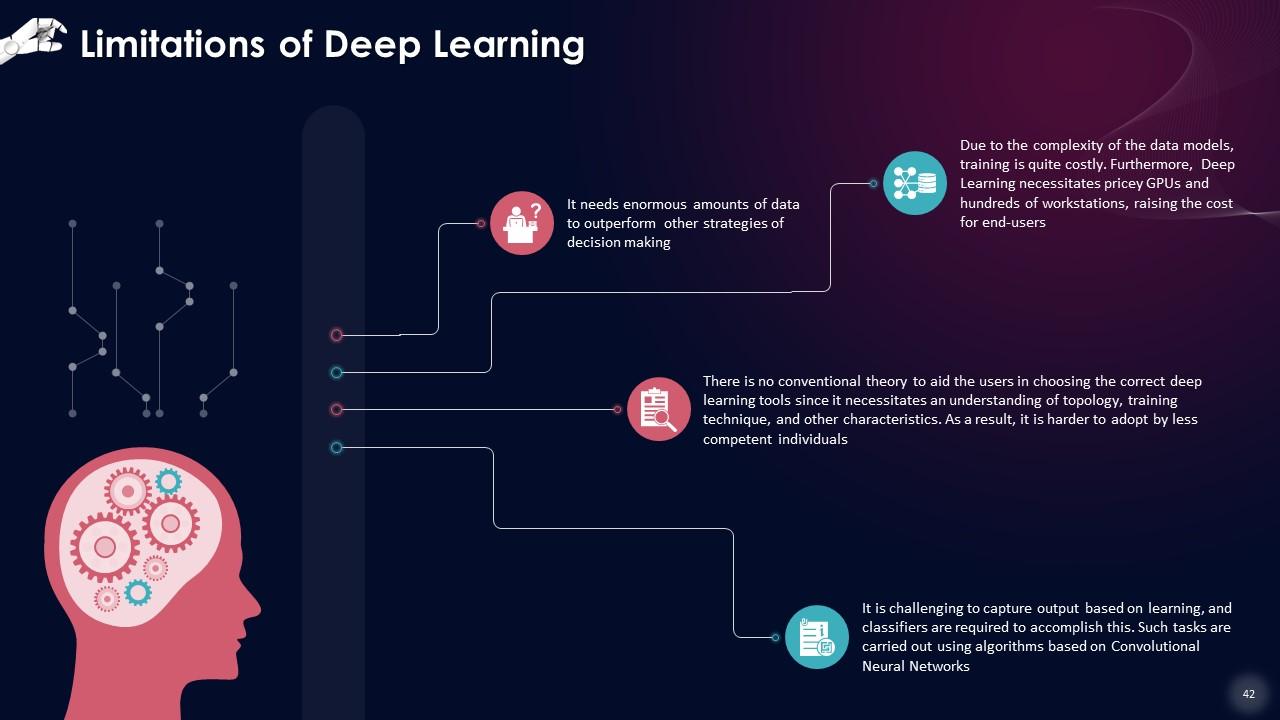

- Communication overhead: Coordinating the computations and transferring the model updates across the distributed devices introduces additional communication overhead, which can impact training efficiency.

- Synchronization complexities: Ensuring the synchronization of gradients and model parameters across devices requires careful coordination, as the parallel nature of distributed training can introduce synchronization challenges.

- Infrastructure setup and management: Setting up and managing the distributed infrastructure can be complex and requires expertise in distributed computing platforms and system administration.

Overview Of Fundamental Concepts And Techniques

- Data parallelism: This technique involves splitting the training data across multiple devices, where each device processes a subset of the data simultaneously. The gradients are then aggregated to update the model parameters.

- Model parallelism: In this approach, the model itself is divided across multiple devices, with each device responsible for computing a specific part of the model during the forward and backward passes.

- Parameter server architecture: This architecture keeps the model parameters on one or more dedicated parameter servers. Workers pull the current parameters, compute gradients on their share of the data, and push those gradients back to the servers, which aggregate them and update the parameters.
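To make the parameter server idea more concrete, here is a minimal single-process sketch. It is a toy simulation, not how any particular framework implements it: the `ParameterServer` class, the `worker_gradient` helper, the linear model, and the learning rate are all assumptions chosen for illustration.

```python
class ParameterServer:
    """Holds the authoritative copy of the parameters (hypothetical helper)."""

    def __init__(self, params, lr):
        self.params = list(params)
        self.lr = lr

    def pull(self):
        # Workers fetch a copy of the current parameters.
        return list(self.params)

    def push(self, grads_per_worker):
        # Average the workers' gradients, then apply one SGD step.
        n = len(grads_per_worker)
        for i in range(len(self.params)):
            avg = sum(g[i] for g in grads_per_worker) / n
            self.params[i] -= self.lr * avg


def worker_gradient(params, shard):
    # Gradient of the mean squared error of y = w * x + b on this worker's shard.
    w, b = params
    n = len(shard)
    gw = sum(2 * (w * x + b - y) * x for x, y in shard) / n
    gb = sum(2 * (w * x + b - y) for x, y in shard) / n
    return [gw, gb]


# Toy data for y = 2x + 1, split into two equal shards (one per worker).
data = [(x, 2 * x + 1) for x in [-2.0, -1.0, 0.0, 1.0, 2.0, 3.0]]
shards = [data[0::2], data[1::2]]

server = ParameterServer(params=[0.0, 0.0], lr=0.05)
for _ in range(500):
    current = server.pull()
    server.push([worker_gradient(current, s) for s in shards])

print(server.params)  # should end up close to w = 2, b = 1
```

In a real deployment the pull and push calls would be network requests, and the workers would run concurrently on separate machines.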

To optimize distributed training, various tools and frameworks, such as TensorFlow, PyTorch, and Horovod, have been developed to handle the complexities of distributed computing and facilitate efficient collaborative training.

Distributed training encompasses the combination of technology, algorithms, and computational resources to push the boundaries of deep learning. Its applications span across fields like image recognition, natural language processing, and recommendation systems. By leveraging the power of distributed training, we unlock new possibilities for neural networks, propelling us into a future where intelligent systems continue to evolve and achieve remarkable feats.

Key Tools For Distributed Training

Distributed Training Of Neural Networks – Fundamentals & Tools

Distributed training has become essential in the field of deep learning to handle large-scale datasets and complex neural networks. By leveraging the power of multiple machines or GPUs, distributed training allows for faster and more efficient training, enabling the exploration and development of more sophisticated models.

In this section, we will explore some of the key tools for distributed training, including TensorFlow, PyTorch, Horovod, and distributed TensorFlow. We will also touch upon the different approaches of data parallelism and model parallelism in distributed training.

TensorFlow: A Powerful Framework For Distributed Training

TensorFlow is one of the most popular frameworks for distributed training due to its robustness and scalability. Here are some key points about TensorFlow’s capabilities:

- TensorFlow provides a high-level API, called tf.distribute, that simplifies the process of distributing training across multiple devices or machines.

- With tf.distribute, TensorFlow handles tasks such as replicating the model, splitting each batch across replicas, and aggregating gradients.

- TensorFlow supports several communication strategies for distributed training, including parameter servers and collective operations such as all-reduce.

- Distributed TensorFlow can scale across clusters of machines, allowing for training on massive datasets with millions or even billions of samples.

PyTorch: Leveraging Distributed Training Capabilities

PyTorch is another popular framework that provides powerful tools for distributed training. Here are some key points about PyTorch’s capabilities:

- PyTorch supports single-machine multi-GPU training through its torch.nn.DataParallel module, which wraps the model and parallelizes the forward and backward passes across the GPUs of one machine within a single process.

- With DataParallel, PyTorch handles the complexities of data parallelism, allowing users to train their models with ease.

- PyTorch also provides a torch.nn.parallel.DistributedDataParallel module, which runs one process per device and enables distributed training across multiple GPUs and machines. It relies on a torch.distributed communication backend, such as NCCL, for inter-process communication, and the PyTorch documentation recommends it over DataParallel even on a single machine.

- Distributed PyTorch allows for efficient training on large-scale datasets and complex models, while providing flexibility and ease of use.

Horovod: Optimizing Deep Learning Across Distributed Systems

Horovod is a distributed training framework developed by Uber that aims to optimize deep learning across distributed systems. Here are some key points about Horovod:

- Horovod simplifies the process of distributed training by providing a simple interface that can be easily integrated with popular deep learning frameworks, including TensorFlow, PyTorch, and MXNet.

- It can use NVIDIA’s NCCL library for efficient inter-GPU communication and MPI for coordination between processes and nodes, allowing for fast and scalable distributed training.

- Horovod is built primarily around data parallelism: each worker holds a full copy of the model, and gradients are averaged across workers with all-reduce operations.

- By leveraging Horovod, developers can approach near-linear scaling in training speed when adding more GPUs or machines, provided that communication does not become the bottleneck.

Distributed TensorFlow: Scaling Neural Networks Efficiently

Distributed TensorFlow is not a separate product; the term refers to TensorFlow’s built-in support for running training across multiple devices or machines. Here are some key points about distributed TensorFlow:

- Distributed TensorFlow commonly follows a data parallelism approach, where the model is replicated across multiple devices or machines, and each replica processes a different batch of data in parallel.

- One option is a parameter server architecture, in which dedicated servers hold the model’s parameters and keep them synchronized across all replicas during training.

- Distributed TensorFlow supports both synchronous and asynchronous updates, which trade off throughput against the consistency of the parameters.

- By distributing the computation and data across multiple devices or machines, distributed TensorFlow allows for efficient scaling of neural networks, enabling the training of large-scale models.

Data Parallelism Vs. Model Parallelism: Choosing The Right Approach

When it comes to distributed training, there are two main approaches: data parallelism and model parallelism. Here are some points to consider when choosing between these approaches:

Data parallelism:

- In data parallelism, the model is replicated across devices or machines, and each replica processes a separate batch of data independently.

- Data parallelism is suitable when the model fits in the memory of a single device and the training data can be easily divided into smaller batches.

- It allows for faster training as each replica performs forward and backward passes simultaneously.

- However, data parallelism requires efficient inter-device or inter-node communication to synchronize the gradients and update the model parameters.

Model parallelism:

- In model parallelism, the model is divided into segments, and each segment is processed on a separate device or machine.

- Model parallelism is suitable for models with a large memory footprint or complex architectures that cannot fit into a single device’s memory.

- It allows for training larger models that cannot be trained using data parallelism alone.

- However, model parallelism requires passing activations and gradients between segments, and devices may sit idle while they wait for one another, which can be challenging to handle efficiently in practice.

Choosing the right approach depends on the specific requirements of the model, the computational resources available, and the scalability needs of the training process.
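As a rough illustration of the first criterion, the sketch below estimates whether the training state of a model fits on one device. The 16 bytes per parameter figure is an assumption (fp32 weights, fp32 gradients, and two fp32 Adam moments), activations are ignored entirely, and both function names are hypothetical, so treat the result as a first guess rather than a rule.

```python
def training_state_gb(num_params, bytes_per_param=16):
    """Rough memory for weights, gradients, and optimizer state, in GB.

    The default of 16 bytes per parameter assumes fp32 weights (4 bytes),
    fp32 gradients (4 bytes), and two fp32 Adam moments (8 bytes).
    Activation memory is not counted.
    """
    return num_params * bytes_per_param / 1e9


def suggest_strategy(num_params, device_memory_gb):
    # If the training state fits on one device, replicas are possible.
    if training_state_gb(num_params) < device_memory_gb:
        return "data parallelism"
    return "model parallelism (possibly combined with data parallelism)"


print(suggest_strategy(100_000_000, device_memory_gb=16))
print(suggest_strategy(10_000_000_000, device_memory_gb=80))
```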

By leveraging powerful tools like TensorFlow, PyTorch, Horovod, and distributed TensorFlow, developers can effectively distribute and optimize the training of neural networks, enabling breakthroughs in deep learning research and applications.

Fundamentals Of Distributed Training

Distributed training of neural networks is becoming increasingly popular due to its effectiveness in handling large-scale datasets and complex models. By leveraging multiple computational resources, it allows for faster training and improved model performance. In this section, we will delve into the fundamentals of distributed training, exploring key concepts and techniques that make it possible.

Let’s explore the following areas:

Data Partitioning: Dividing Training Data Across Multiple Nodes

- The training data is divided into subsets and distributed across multiple nodes.

- Each node processes a portion of the data independently, reducing the computational load.

- Enables parallel processing, accelerating the training process.

- Data partitioning can be performed randomly, sequentially, or based on certain criteria.
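A minimal sketch of one way to partition a dataset by index is shown below. The `shard_indices` function and its parameters are hypothetical; it uses a strided split after an optional shuffle with a shared seed, which is only one of several reasonable schemes.

```python
import random


def shard_indices(num_samples, num_nodes, rank, seed=0, shuffle=True):
    """Return the sample indices assigned to node `rank`."""
    indices = list(range(num_samples))
    if shuffle:
        # Every node uses the same seed, so all nodes agree on the ordering.
        random.Random(seed).shuffle(indices)
    # Strided split: node r takes positions r, r + num_nodes, r + 2 * num_nodes, ...
    return indices[rank::num_nodes]


shards = [shard_indices(10, 3, rank) for rank in range(3)]
for rank, shard in enumerate(shards):
    print(rank, shard)
```

Because every node derives its shard from the same seed and its own rank, no node needs to communicate with the others to know which samples it owns, and no sample is assigned twice.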

Model Parallelism: Breaking Down Models Into Smaller Sub-Parts

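- In model parallelism, a model is divided into smaller sub-parts, each of which is placed on a different node. The sub-parts are not independent: each one depends on the outputs of the part before it.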

- Each node focuses on training a specific sub-part of the model.

- Allows for parallel processing and efficient utilization of computational resources.

- Sub-parts can be connected through inter-node communication to pass information during training.
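The toy sketch below shows what passing information between sub-parts looks like for a two-stage model. The `Stage` class is hypothetical, the "devices" are simulated inside one process, and each stage is a single scalar weight so that the numbers are easy to follow.

```python
class Stage:
    """One slice of the model, y = w * x, living on its own (simulated) device."""

    def __init__(self, weight):
        self.weight = weight
        self.grad = 0.0
        self._input = None

    def forward(self, x):
        self._input = x  # cache the input for the backward pass
        return self.weight * x

    def backward(self, grad_output):
        self.grad = grad_output * self._input  # gradient for this stage's weight
        return grad_output * self.weight       # gradient sent to the previous stage


stage0, stage1 = Stage(0.5), Stage(-1.5)  # imagine these on device 0 and device 1
x, target = 2.0, 3.0

# Forward: the activation crosses the device boundary between the stages.
hidden = stage0.forward(x)
output = stage1.forward(hidden)
loss = (output - target) ** 2

# Backward: the gradient crosses the same boundary in the opposite direction.
grad_hidden = stage1.backward(2 * (output - target))
stage0.backward(grad_hidden)

print(loss, stage0.grad, stage1.grad)
```

Note that stage 1 cannot start its forward pass until stage 0 has finished, and stage 0 cannot start its backward pass until stage 1 has finished. This dependency is why devices can sit idle in naive model parallelism.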

Synchronous Vs. Asynchronous Training: Pros And Cons

- Synchronous training ensures that all nodes wait for each other to complete a particular step before proceeding.

- Pros: Keeps all replicas consistent, so every update is computed from the same parameters, which makes convergence behavior more predictable.

- Cons: Slower compared to asynchronous training due to synchronization overhead.

- Asynchronous training allows nodes to independently progress through training steps.

- Pros: Faster training as nodes don’t wait for each other.

- Cons: Gradients may be computed from stale parameters, which can slow or destabilize convergence.
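The effect of stale gradients can be illustrated with a deliberately simple simulation. The sketch below minimizes f(w) = w²/2 and models asynchronous training as applying gradients that were computed from parameters a few updates old. The `run` and `updates_above` helpers, the learning rate, and the staleness value are assumptions for illustration, and the model says nothing about wall-clock time, where asynchronous training has the advantage.

```python
def run(lr, staleness, steps=60, w0=1.0):
    """Minimize f(w) = w**2 / 2 (gradient = w) using gradients that are
    `staleness` updates old. staleness=0 models synchronous training."""
    history = [w0] * (staleness + 1)  # parameter values seen so far
    for _ in range(steps):
        stale_w = history[-1 - staleness]  # parameters the worker read
        grad = stale_w                     # gradient of w**2 / 2 at stale_w
        history.append(history[-1] - lr * grad)
    return history[staleness:]             # trajectory starting at w0


def updates_above(trajectory, tol=0.01):
    # Number of points on the trajectory still farther than `tol` from the optimum.
    return sum(1 for w in trajectory if abs(w) > tol)


sync_traj = run(lr=0.3, staleness=0)
async_traj = run(lr=0.3, staleness=3)
print(updates_above(sync_traj), updates_above(async_traj))
```

With the same learning rate, the stale run overshoots the optimum and oscillates before settling, so it needs more updates to get close. Whether that cost is outweighed by the higher update rate depends on the workload.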

Gradient Aggregation: Combining Gradients From Different Nodes

- During distributed training, gradients computed by different nodes need to be combined.

- Different techniques are used for gradient aggregation, such as averaging or weighted merging.

- Aggregating gradients ensures that the model effectively learns from all subsets of the data.

- The aggregated gradient is then used to update the model parameters.
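The difference between plain averaging and weighted merging matters when nodes hold different numbers of samples. In the sketch below, which uses a hypothetical one-parameter model y = w * x and made-up data, weighting each node's gradient by its sample count reproduces the full-batch gradient, while a plain average does not.

```python
def mean_gradient(w, samples):
    # Gradient of the mean squared error of the model y = w * x.
    return sum(2 * (w * x - y) * x for x, y in samples) / len(samples)


def aggregate(grads, weights=None):
    """Combine per-node gradients; uniform weights give a plain average."""
    if weights is None:
        weights = [1.0] * len(grads)
    total = sum(weights)
    return sum(g * wt for g, wt in zip(grads, weights)) / total


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
shards = [data[:3], data[3:]]  # deliberately unequal: 3 samples and 1 sample
w = 0.0

local = [mean_gradient(w, s) for s in shards]
plain = aggregate(local)
weighted = aggregate(local, weights=[len(s) for s in shards])
full_batch = mean_gradient(w, data)

print(plain, weighted, full_batch)
```

When every node processes the same number of samples per step, which is the common setup, the two schemes coincide and a plain average is enough.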

Communication Protocols: Ensuring Efficient Information Exchange

- Communication protocols facilitate the exchange of information between nodes.

- Efficient communication is crucial for distributed training performance.

- Different options, such as the Message Passing Interface (MPI) or plain TCP/IP sockets, can be utilized.

- High-bandwidth and low-latency communication is preferred to minimize training time.
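One widely used communication pattern built on top of such protocols is the ring all-reduce, in which each node only talks to its neighbor. The sketch below simulates it in a single process; the `ring_allreduce` function is hypothetical, and for simplicity it assumes each node's vector has exactly as many elements as there are nodes (one element per chunk).

```python
def ring_allreduce(vectors):
    """Sum the vectors held by n nodes over a simulated ring.

    Assumes len(vector) == n for every node, i.e. one element per chunk.
    Returns the final buffer of every node.
    """
    n = len(vectors)
    if any(len(v) != n for v in vectors):
        raise ValueError("this sketch needs one element per node in each vector")
    data = [list(v) for v in vectors]  # data[i] is node i's local buffer

    # Phase 1, reduce-scatter: after n - 1 steps, node i holds the full
    # sum of chunk (i + 1) % n.
    for step in range(n - 1):
        outgoing = []
        for i in range(n):
            chunk = (i - step) % n
            outgoing.append(((i + 1) % n, chunk, data[i][chunk]))
        for dst, chunk, value in outgoing:
            data[dst][chunk] += value

    # Phase 2, all-gather: pass the finished chunks around the ring.
    for step in range(n - 1):
        outgoing = []
        for i in range(n):
            chunk = (i + 1 - step) % n
            outgoing.append(((i + 1) % n, chunk, data[i][chunk]))
        for dst, chunk, value in outgoing:
            data[dst][chunk] = value

    return data


result = ring_allreduce([[1, 2, 3], [10, 20, 30], [100, 200, 300]])
print(result)
```

Each node sends and receives only one chunk per step, so the amount of data each node transfers stays roughly constant as more nodes join the ring.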

Distributed training of neural networks relies on the fundamentals discussed above – data partitioning, model parallelism, synchronous and asynchronous training, gradient aggregation, and communication protocols. These concepts form the basis for effective utilization of distributed computational resources, enabling faster and more scalable training of complex neural network models.

The tools covered in the Key Tools section above, such as TensorFlow, PyTorch, and Horovod, implement these building blocks and make distributed training easier and more efficient to set up.

Best Practices For Distributed Training

Scaling Strategies: Adding More Nodes For Increased Performance

Scaling is a crucial aspect of distributed training, allowing us to achieve better performance by adding more computing resources. Here are some key points to consider when implementing scaling strategies:

- Distributed data parallelism: This approach involves splitting the data across multiple nodes and performing parallel computations on each subset of data. This allows for faster model training and improved performance.

- Model parallelism: In some cases, the model itself may be too large to fit into the memory of a single node. Model parallelism involves splitting the model across multiple nodes and performing parallel computations on each part. This enables training of large models efficiently.

- Efficient network communication: Communication overhead between nodes can be a bottleneck in distributed training. Employing efficient network communication techniques, such as using high-speed network interfaces and optimizing data transfer protocols, can significantly improve performance.
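To see why communication limits the benefit of adding nodes, consider the back-of-the-envelope model below. It assumes a fixed global batch (so compute time divides evenly by the number of nodes), a ring all-reduce whose cost is dominated by bandwidth, and no overlap between computation and communication. The function names and the example numbers are hypothetical.

```python
def step_time(num_nodes, compute_s, grad_bytes, bandwidth_bytes_per_s):
    """Estimated seconds per training step under the toy model above."""
    compute = compute_s / num_nodes
    if num_nodes == 1:
        return compute
    # Bandwidth term of a ring all-reduce: each node transfers roughly
    # 2 * (n - 1) / n times the gradient size.
    comm = 2 * (num_nodes - 1) / num_nodes * grad_bytes / bandwidth_bytes_per_s
    return compute + comm


def scaling_efficiency(num_nodes, **workload):
    # Speedup over one node, divided by the ideal (linear) speedup.
    speedup = step_time(1, **workload) / step_time(num_nodes, **workload)
    return speedup / num_nodes


# Hypothetical workload: 1 s of compute per step on one node,
# 400 MB of gradients, 10 GB/s links.
workload = dict(compute_s=1.0, grad_bytes=400e6, bandwidth_bytes_per_s=10e9)
for n in (1, 2, 4, 8):
    print(n, round(scaling_efficiency(n, **workload), 3))
```

In this model the efficiency drops as nodes are added, because the compute share per node shrinks while the communication cost does not. Faster interconnects or overlapping communication with computation push the curve back toward linear scaling.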

Fault Tolerance: Handling Failures In Distributed Systems

In distributed training, failures are inevitable in large-scale systems. It is crucial to design fault-tolerant systems that can handle such failures seamlessly. Here are some key points to consider for fault tolerance in distributed training:

- Replication: By replicating data and model weights across multiple nodes, we can ensure that a single node failure does not destroy the only copy of the training state, so training can continue or restart with little interruption.

- Checkpointing: Periodically saving checkpoints of the model and training progress allows for easy recovery in the event of failures. This ensures that training can resume from the last checkpoint, minimizing the loss of progress.

- Failure detection and recovery: Implementing mechanisms to detect failures and automatically recover from them can help maintain the stability and continuity of distributed training.
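Checkpointing and recovery can be sketched with nothing but the standard library. In the example below, the JSON file format, the `train` loop, and the simulated failure are all assumptions for illustration; real frameworks provide their own checkpoint formats.

```python
import json
import os
import tempfile


def save_checkpoint(path, step, params):
    """Write to a temp file, then rename it into place, so a crash
    mid-write never leaves a half-written checkpoint at `path`."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"step": step, "params": params}, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return (step, params), or (0, None) when no checkpoint exists yet."""
    if not os.path.exists(path):
        return 0, None
    with open(path) as f:
        state = json.load(f)
    return state["step"], state["params"]


def train(path, total_steps, checkpoint_every=10, fail_at=None):
    step, params = load_checkpoint(path)
    params = params if params is not None else [0.0]
    while step < total_steps:
        if fail_at is not None and step == fail_at:
            raise RuntimeError("simulated node failure")
        params[0] += 1.0  # stand-in for one real training step
        step += 1
        if step % checkpoint_every == 0:
            save_checkpoint(path, step, params)
    return step, params


with tempfile.TemporaryDirectory() as workdir:
    ckpt = os.path.join(workdir, "ckpt.json")
    try:
        train(ckpt, total_steps=50, fail_at=25)
    except RuntimeError:
        pass  # the "node" crashed at step 25
    resumed_from, _ = load_checkpoint(ckpt)  # last checkpoint before the crash
    final_step, final_params = train(ckpt, total_steps=50)

print(resumed_from, final_step, final_params)
```

The run that crashes at step 25 loses only the five steps since the last checkpoint; the second call to `train` picks up from step 20. Checkpointing more often reduces lost work at the cost of more time spent writing files.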

Load Balancing: Distributing Workload Evenly Across Nodes

Load balancing is essential to ensure that the workload is evenly distributed across all the nodes in a distributed training setup. Here are some key points regarding load balancing:

- Dynamic load balancing: With dynamic load balancing techniques, the system can adaptively distribute the workload based on the current load of each node. This ensures that no single node is overloaded, leading to more efficient resource utilization.

- Task scheduling: Efficient task scheduling algorithms can help evenly distribute the training workload across the available nodes. Techniques such as round-robin scheduling or proportional task allocation based on node capabilities can ensure fairness and optimal resource utilization.
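Proportional task allocation can be sketched as follows. The `proportional_split` function is hypothetical, and the capability numbers stand in for something like measured samples per second on each node.

```python
def proportional_split(num_samples, capabilities):
    """Split `num_samples` across nodes in proportion to `capabilities`,
    using largest-remainder rounding so the shares sum to `num_samples`."""
    total = sum(capabilities)
    exact = [num_samples * c / total for c in capabilities]
    shares = [int(e) for e in exact]
    leftover = num_samples - sum(shares)
    # Hand the remaining samples to the nodes with the largest fractional parts.
    by_fraction = sorted(
        range(len(exact)),
        key=lambda i: exact[i] - shares[i],
        reverse=True,
    )
    for i in by_fraction[:leftover]:
        shares[i] += 1
    return shares


print(proportional_split(100, [1, 1, 2]))  # the third node is twice as fast
print(proportional_split(10, [1, 1, 1]))   # equal nodes, uneven division
```

Giving a faster node a proportionally larger share means all nodes finish a step at about the same time, which matters most in synchronous training, where every step waits for the slowest node.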

Monitoring And Debugging: Tools And Techniques For Effective Troubleshooting

Effective monitoring and debugging are essential to ensure the smooth operation of distributed training systems. Here are some key points to consider:

- Distributed log analysis: Collecting and analyzing logs from all the nodes can provide valuable insights into system behavior, identifying potential bottlenecks or issues.

- Performance profiling: Profiling tools can help identify performance bottlenecks and optimize the system for better performance. By analyzing the resource utilization and execution time of each component, we can identify areas for improvement.

- Distributed tracing: Tracing requests across the distributed system can help identify latency issues and performance bottlenecks. This allows for targeted optimizations to improve the overall system performance.
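As a small example of what such analysis can look like, the sketch below flags slow nodes from per-step timings. The `find_stragglers` function, the threshold of 1.5 times the median, and the timing data are all made up for illustration.

```python
from statistics import mean, median


def find_stragglers(step_times, threshold=1.5):
    """Return nodes whose mean step time exceeds `threshold` times the
    median of all nodes' mean step times."""
    averages = {node: mean(times) for node, times in step_times.items()}
    typical = median(averages.values())
    return sorted(node for node, avg in averages.items() if avg > threshold * typical)


# Hypothetical per-step timings in seconds, collected from each node's logs.
timings = {
    "node-0": [0.21, 0.20, 0.22],
    "node-1": [0.20, 0.21, 0.19],
    "node-2": [0.48, 0.51, 0.50],  # a slow node holds up every synchronous step
    "node-3": [0.22, 0.20, 0.21],
}

print(find_stragglers(timings))
```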

Efficient Data Preprocessing: Optimizing Data Transformations In Distributed Settings

Data preprocessing is a critical step in neural network training. In distributed settings, optimizing data transformations becomes essential for efficient training. Here are some key points to consider:

- Data parallelism in preprocessing: When using data parallelism for training, preprocessing can also be parallelized to distribute the workload across nodes. This ensures better utilization of resources and faster data preparation.

- Preprocessing pipeline optimization: Streamlining the preprocessing pipeline, reducing redundant computations, and optimizing data loading and transformation operations can significantly improve training efficiency.

- Data partitioning: Ensuring that the data is partitioned efficiently across nodes can reduce data transfer overhead and improve overall training performance.

By following these best practices for distributed training, you can optimize the performance, fault tolerance, load balancing, monitoring, and data preprocessing in your neural network training workflows, enabling you to train models effectively and efficiently.

Frequently Asked Questions For Distributed Training Of Neural Networks – Fundamentals & Tools

How Does Distributed Training Of Neural Networks Work?

Distributed training of neural networks involves breaking down the training process among multiple machines to accelerate learning.

What Are The Benefits Of Distributed Training For Neural Networks?

Distributed training improves training speed, supports larger models and datasets, and enables scalability and fault tolerance.

Which Tools Can Be Used For Distributed Training Of Neural Networks?

Popular tools for distributed training of neural networks include TensorFlow, PyTorch, Horovod, and Microsoft Cognitive Toolkit (CNTK).

What Are The Challenges In Distributed Training Of Neural Networks?

Challenges include communication overhead, synchronization issues, load imbalance, and handling the failure of individual workers.

How Can I Implement Distributed Training For My Neural Network Model?

To implement distributed training, you need to set up a cluster of machines, partition the data, choose a communication strategy, and utilize appropriate frameworks or libraries.

Conclusion

Distributed training of neural networks plays a crucial role in accelerating the training process and enhancing the performance of machine learning models. By distributing the workload across multiple devices or machines, the computation each device must perform is significantly reduced, at the cost of some communication overhead between them.

This allows for larger and more complex models to be trained efficiently, leading to improved accuracy and shorter training times. There are various tools and frameworks available to facilitate distributed training, such as TensorFlow, PyTorch, and Horovod. These tools provide an infrastructure for parallelizing the training process and handling the distribution of data and gradient updates.

As the demand for machine learning models continues to grow, it is crucial for researchers and industry professionals to leverage distributed training techniques to tackle larger datasets and more complex problems. The ability to train models quickly and effectively is essential for developing cutting-edge solutions across various domains, from computer vision to natural language processing.

The fundamentals and tools discussed in this blog post provide a solid foundation for anyone interested in exploring distributed training for neural networks. By employing these techniques, researchers and practitioners can unlock the true potential of machine learning and drive innovation in the field.