Multi-armed bandits balance exploration and exploitation using strategies such as “epsilon-greedy”. In this approach, the algorithm picks a random option a small fraction of the time (exploration) and otherwise picks the option with the highest average reward observed so far (exploitation).

This allows for a balance between gathering more information and maximizing immediate rewards. Multi-armed bandit problems are a class of sequential decision problems under uncertainty, in which the goal is to find the best option, or arm, and maximize the total reward over time.

One of the key challenges in this type of problem is how to balance the exploration of new options with the exploitation of those that have shown promising results. This balance is crucial in order to find the optimal solution while avoiding unnecessary losses. We will explore how multi-armed bandits achieve this balance through the epsilon-greedy strategy. We will also discuss the advantages and limitations of this approach, as well as other techniques used to balance exploration and exploitation.

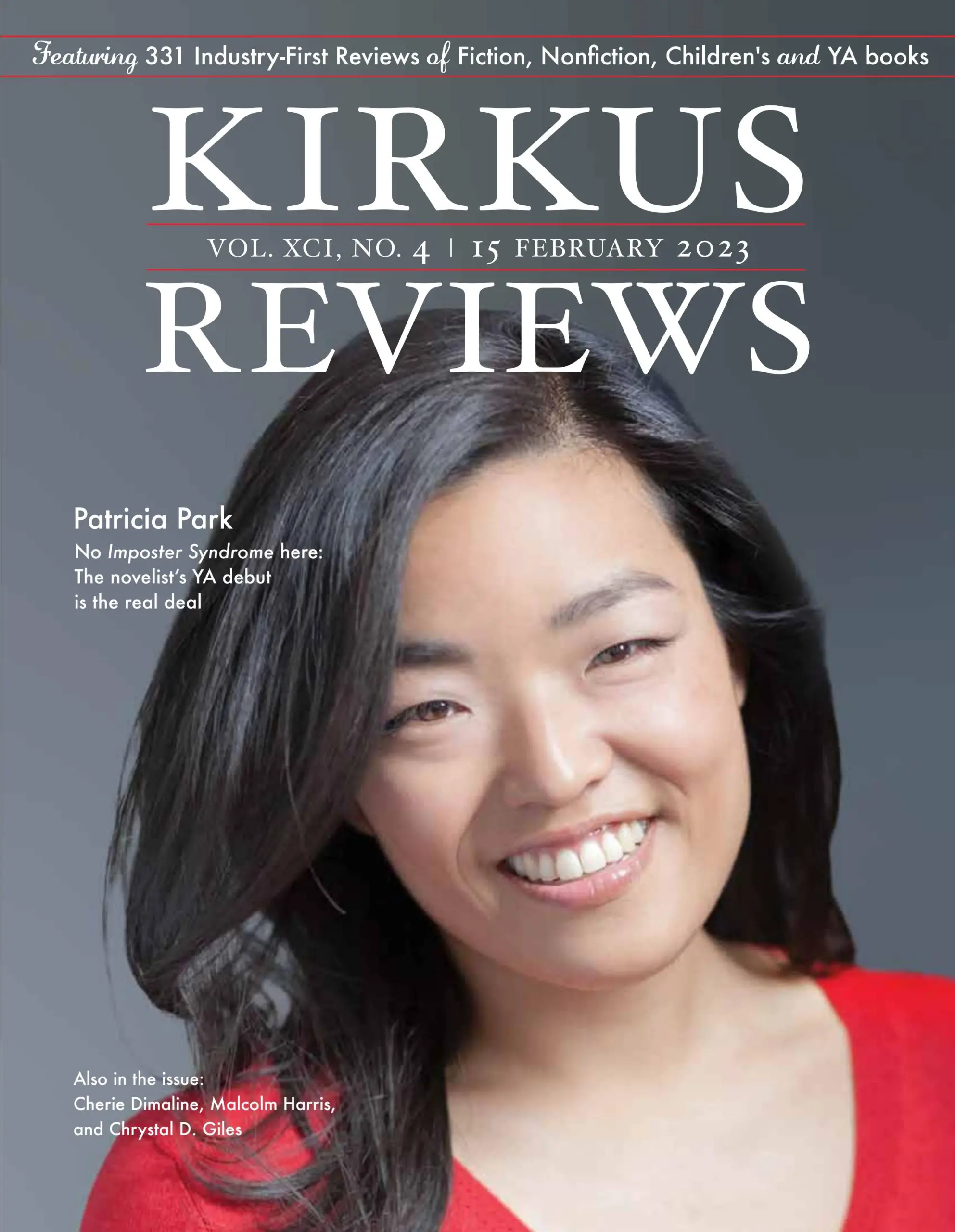

Credit: asia.nikkei.com

The Basics Of Multi-Armed Bandits

Are you curious about how multi-armed bandits strike a balance between exploration and exploitation? In this section, we will dive into the fundamentals of multi-armed bandits and understand how they work. Let’s explore the key aspects of this intriguing concept.

Introduction To Multi-Armed Bandits

Multi-armed bandits are a class of reinforcement learning problems that capture the exploration-exploitation dilemma. The name comes from the analogy of a gambler facing a row of slot machines, each known as a one-armed bandit. Each slot machine, or arm, pays out according to an unknown probability distribution.

The goal of the gambler is to maximize their total winnings over time.
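
To make the slot-machine analogy concrete, here is a minimal sketch of a simulated bandit. It assumes each arm pays 1 with a fixed hidden probability and 0 otherwise (a Bernoulli reward model, chosen here for simplicity). The class name `BernoulliBandit` and the example probabilities are illustrative and do not come from any library.

```python
import random


class BernoulliBandit:
    """Simulated slot machines: arm i pays 1 with probability probs[i], else 0.

    Hypothetical helper for illustration; the Bernoulli reward model is an assumption.
    """

    def __init__(self, probs, seed=None):
        self.probs = list(probs)        # hidden win probabilities, unknown to the player
        self.rng = random.Random(seed)  # private RNG so runs can be reproduced

    @property
    def n_arms(self):
        return len(self.probs)

    def pull(self, arm):
        """Play one arm and return the reward (1 or 0)."""
        return 1 if self.rng.random() < self.probs[arm] else 0


if __name__ == "__main__":
    bandit = BernoulliBandit([0.2, 0.5, 0.7], seed=0)
    winnings = sum(bandit.pull(2) for _ in range(1000))
    print("Total winnings from 1000 pulls of arm 2:", winnings)
```

The gambler only ever sees the values returned by `pull`, never `probs`, which is what makes the problem one of learning under uncertainty.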

Explanation Of Exploration And Exploitation In Multi-Armed Bandits

To understand how multi-armed bandits strike a balance between exploration and exploitation, let’s take a closer look at these two concepts:

- Exploration:

- When faced with multiple arms, exploration refers to the act of trying out different arms to gather information about their rewards. This involves selecting arms that have not been extensively tested to obtain a better understanding of their potential payoffs.

- By exploring, the algorithm seeks to reduce uncertainty and gather knowledge about the arms that could lead to higher rewards in the long run.

- During the exploration phase, the algorithm dedicates a portion of its resources to collecting data by selecting arms at random or following a specific exploration strategy.

- Exploitation:

- Exploitation, on the other hand, involves selecting the arm that is expected to yield the highest reward based on the gathered knowledge.

- The algorithm exploits the information it has acquired during the exploration phase to maximize its cumulative reward.

- Pure exploitation makes the most of current knowledge, but it risks missing higher rewards that could be obtained by exploring lesser-known arms.

By dynamically adjusting the exploration-exploitation trade-off, multi-armed bandits attempt to maximize long-term rewards while minimizing the opportunity cost of exploration.
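
The opportunity cost mentioned above is usually formalized as regret. As a sketch in standard notation (the symbols are introduced here and are not part of the original text): let $\mu_a$ be the expected reward of arm $a$, let $\mu^* = \max_a \mu_a$ be the expected reward of the best arm, and let $a_t$ be the arm chosen in round $t$. The expected regret after $T$ rounds is then

```latex
R_T = T\,\mu^* - \mathbb{E}\left[\sum_{t=1}^{T} \mu_{a_t}\right]
```

A strategy that never stops choosing poor arms at a constant rate has regret that grows linearly in $T$, while a strategy that balances the trade-off well keeps $R_T$ growing more slowly than $T$.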

In this section, we delved into the basics of multi-armed bandits, providing an introduction to this unique concept and explaining the intricacies of exploration and exploitation. Understanding these fundamental concepts will lay a solid foundation for exploring how multi-armed bandits strike the delicate balance between exploration and exploitation.

So, let’s move forward and uncover more about this fascinating topic!

The Trade-Off: Exploration Vs Exploitation

Understanding The Trade-Off Between Exploration And Exploitation

In the realm of multi-armed bandit algorithms, striking the right balance between exploration and exploitation is crucial. This trade-off is at the heart of decision-making, where we must decide whether to explore new options or exploit existing knowledge. Let’s delve deeper into this intriguing dilemma and understand its significance in the context of multi-armed bandits.

Exploration:

- Exploring involves taking a chance and trying out different options to gather more information about their potential.

- During the exploration phase, a multi-armed bandit algorithm may select arms that have yielded limited rewards so far or arms whose potential has not been fully evaluated.

- By exploring, we can discover new opportunities and acquire knowledge about the various options available.

Exploitation:

- Exploitation focuses on exploiting the existing knowledge and choosing arms that have proven to be more rewarding in the past.

- This strategy aims to maximize the accumulated rewards by repeatedly selecting the arms that have shown promising results.

- Exploitation is based on the assumption that the best decision is to stick with what is known to be good until compelling evidence suggests otherwise.

Finding the right balance:

- Striking the right balance between exploration and exploitation is essential because going to either extreme can lead to suboptimal outcomes.

- If we solely focus on exploration, we may miss out on exploiting highly rewarding arms, resulting in missed opportunities for maximizing rewards.

- On the other hand, if we only exploit and neglect exploration, we may get stuck with suboptimal choices and fail to uncover better options.

Addressing uncertainty:

- Multi-armed bandit algorithms incorporate various strategies to address this exploration-exploitation dilemma, taking into account the inherent uncertainty in decision-making.

- These algorithms employ methods such as epsilon-greedy, UCB1, and Thompson sampling to balance exploration and exploitation effectively.

- By dynamically adjusting the exploration rate or using uncertainty estimates, these algorithms adapt to the evolving landscape of rewards and information.

The role of rewards:

- Rewards play a crucial role in fine-tuning the balance between exploration and exploitation in multi-armed bandit algorithms.

- By incorporating the feedback from rewards, these algorithms incrementally update their understanding of the arms’ potential and adjust their decision-making accordingly.

- Arms with high estimated rewards are chosen more often (exploitation), while arms with few observations remain uncertain and are still worth trying occasionally (exploration).
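
The incremental update described in the list above can be sketched as a running average. This is a minimal illustration that assumes the sample mean is used as the reward estimate; `update_estimate` is a hypothetical helper name.

```python
def update_estimate(old_mean, pulls_so_far, reward):
    """Return the new sample mean after one more observed reward.

    old_mean:      average reward over the previous `pulls_so_far` pulls
    pulls_so_far:  number of pulls before this one (0 for a fresh arm)
    reward:        the reward just observed
    """
    n = pulls_so_far + 1
    # Move the old mean toward the new reward by a step of size 1/n.
    return old_mean + (reward - old_mean) / n


if __name__ == "__main__":
    mean, pulls = 0.0, 0
    for r in [1, 0, 1, 1]:
        mean = update_estimate(mean, pulls, r)
        pulls += 1
    # The result matches the plain average of the four rewards,
    # up to floating-point rounding.
    print(mean)
```

Because only the current mean and the pull count are stored, the estimate can be updated in constant time and memory per arm.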

The significance of the exploration-exploitation trade-off:

- Striking the right balance between exploration and exploitation is crucial in various domains, including online advertising, clinical trials, and recommendation systems.

- In these scenarios, limited resources and time make it imperative to optimize decision-making and leverage the available information effectively.

- By understanding and managing the exploration-exploitation trade-off, we can make more informed decisions, maximize rewards, and continuously improve our strategies.

Remember, finding the optimal balance between exploration and exploitation requires careful consideration and constant adaptation. Multi-armed bandit algorithms provide powerful tools for navigating this trade-off and making the most of the available options.

Strategies For Exploration

How Do Multi-Armed Bandits Balance Exploration Vs Exploitation?

Exploration strategies in multi-armed bandits:

Multi-armed bandits are a class of algorithms used to balance the exploration of uncertain options (arms) with the exploitation of known high-performing options. Various strategies can be employed to achieve an optimal balance between exploration and exploitation. Here, we will discuss the exploration strategies used in multi-armed bandits.

Thorough Examination Of Epsilon-Greedy Strategy:

The epsilon-greedy strategy is one of the most commonly used exploration strategies in multi-armed bandits, which helps strike a balance between exploring new arms and exploiting the arms with the highest expected rewards. Key points about the epsilon-greedy strategy include:

- The strategy follows a simple rule: choose the arm with the highest estimated reward most of the time (exploitation), and with a small probability pick an arm at random instead (exploration).

- The exploration parameter, epsilon (ε), determines the probability of selecting a random arm. A higher epsilon value leads to more exploration, while a lower value focuses more on exploitation.

- The epsilon-greedy strategy is easy to implement and computationally efficient, making it a popular choice for many applications.
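
The rule above fits in a few lines. The following is a minimal sketch, not a reference implementation: the function name `epsilon_greedy`, the caller-supplied `pull` callback, and the toy win rates are all assumptions made for illustration.

```python
import random


def epsilon_greedy(pull, n_arms, n_rounds, epsilon=0.1, seed=None):
    """Run epsilon-greedy and return (estimates, counts, total_reward).

    pull:    callable arm_index -> numeric reward (supplied by the caller)
    epsilon: probability of picking a uniformly random arm each round
    """
    rng = random.Random(seed)
    counts = [0] * n_arms        # times each arm was played
    estimates = [0.0] * n_arms   # running mean reward per arm
    total = 0.0

    for _ in range(n_rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                           # explore
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        reward = pull(arm)
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total += reward
    return estimates, counts, total


if __name__ == "__main__":
    # Assumed toy environment: three arms with hidden win rates.
    env_rng = random.Random(1)
    win_rates = [0.2, 0.5, 0.7]

    def pull(arm):
        return 1 if env_rng.random() < win_rates[arm] else 0

    estimates, counts, total = epsilon_greedy(pull, 3, 5000, epsilon=0.1, seed=2)
    print("pull counts:", counts)
    print("estimated win rates:", [round(e, 2) for e in estimates])
```

Setting `epsilon=0.0` turns this into a purely greedy strategy, which can lock onto whichever arm it happens to try first. Setting `epsilon=1.0` makes every choice random.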

Discussing Other Popular Exploration Techniques:

Apart from the epsilon-greedy strategy, several other exploration techniques have been developed to enhance the performance of multi-armed bandit algorithms. These techniques include:

- UCB1 (Upper Confidence Bound 1) algorithm:

- UCB1 balances exploration and exploitation by assigning an upper confidence bound to each arm based on its estimated reward and uncertainty.

- It explores arms with high potential rewards and high uncertainty, helping to gradually refine the estimates of arm rewards.

- Thompson sampling:

- Thompson sampling is a Bayesian exploration strategy that maintains a distribution over the rewards of each arm.

- It samples from these distributions and selects the arm with the highest sampled reward, allowing for uncertainty and exploration.

- Bayesian bandits:

- Bayesian bandit algorithms use Bayesian statistics to continuously update the belief about the rewards of each arm.

- These algorithms intelligently explore arms based on the posterior probability distribution, considering both prior knowledge and observed rewards.

- Softmax action selection:

- This strategy assigns probabilities to arms based on their expected rewards.

- It allows for exploration by selecting arms with lower expected rewards occasionally, while higher probability arms are mostly exploited.
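
Softmax action selection, the last technique in the list above, can be sketched as follows. This assumes the common Boltzmann form with a temperature parameter; the function names and the default temperature are illustrative choices.

```python
import math
import random


def softmax_probabilities(estimates, temperature=0.1):
    """Turn reward estimates into selection probabilities (Boltzmann form)."""
    # Subtract the largest estimate before exponentiating for numerical stability.
    top = max(estimates)
    weights = [math.exp((q - top) / temperature) for q in estimates]
    total = sum(weights)
    return [w / total for w in weights]


def softmax_select(estimates, temperature=0.1, rng=random):
    """Sample one arm index according to the softmax probabilities."""
    probs = softmax_probabilities(estimates, temperature)
    return rng.choices(range(len(estimates)), weights=probs, k=1)[0]


if __name__ == "__main__":
    estimates = [0.2, 0.5, 0.7]
    for temperature in (0.01, 0.1, 1.0):
        probs = softmax_probabilities(estimates, temperature)
        print(temperature, [round(p, 3) for p in probs])
```

A low temperature concentrates almost all probability on the best-looking arm (close to greedy), while a high temperature spreads the choices nearly uniformly.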

Multi-armed bandit algorithms use exploration strategies to strike a balance between exploring uncertain arms and exploiting known high-reward arms. The epsilon-greedy strategy and other popular techniques, like UCB1, Thompson sampling, Bayesian bandits, and softmax action selection, offer different approaches to this crucial balancing act.

By employing these strategies, multi-armed bandits can optimize decision-making and adapt to changing environments.

Strategies For Exploitation

In multi-armed bandits, striking a perfect balance between exploration and exploitation is essential to maximize rewards. While exploration involves trying out different arms to gather information about their potential, exploitation focuses on utilizing the best performing arms to achieve maximum rewards.

In this section, we will delve into the various strategies employed for exploitation in multi-armed bandits and analyze the popular UCB1 algorithm. Additionally, we will highlight the advantages and limitations of different exploitation approaches.

Exploitation Strategies In Multi-Armed Bandits

There are several strategies that can be utilized to emphasize exploitation in multi-armed bandits:

- Greedy algorithm: This straightforward approach involves selecting the arm with the highest estimated expected reward at each step.

- Epsilon-greedy algorithm: This method combines exploration and exploitation by choosing the arm with the highest estimated expected reward most of the time, but occasionally exploring other arms with a small probability (epsilon).

- Softmax exploration: This strategy assigns probabilities to each arm based on their estimated expected rewards and chooses an arm according to these probabilities. Arms with higher estimated rewards have higher chances of being selected.

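- Thompson sampling: This Bayesian method draws a random sample from each arm’s reward distribution (its posterior) and selects the arm with the highest sample. As a result, each arm is chosen with the probability that it is the best arm under the current beliefs.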

Analysis Of Ucb1 Algorithm For Exploitation

One of the well-known algorithms in multi-armed bandits is UCB1, which stands for Upper Confidence Bound 1. It balances exploration and exploitation by computing an upper confidence bound for each arm: the arm’s average reward plus a margin that reflects how uncertain that average still is. The algorithm selects the arm with the highest upper confidence bound at each step.

Here are some key points regarding the UCB1 algorithm:

- UCB1 takes into account both the average reward obtained from an arm and the number of times the arm has been selected.

- It assigns a higher upper confidence bound to arms that have been explored less, encouraging exploration of each arm at the initial stages.

- As an arm is pulled more often, its exploration bonus shrinks and its upper confidence bound approaches its average reward, so the algorithm increasingly exploits the arms with higher expected rewards.
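
The key points above correspond to the standard UCB1 index: an arm’s average reward plus the bonus `sqrt(2 * ln(t) / n)`, where `t` is the current round and `n` is the number of times that arm has been played. Below is a minimal sketch under the usual assumption that rewards lie in [0, 1]. The function name `ucb1` and the `pull` callback are illustrative.

```python
import math
import random


def ucb1(pull, n_arms, n_rounds):
    """Run UCB1 and return (estimates, counts). Assumes rewards lie in [0, 1]."""
    counts = [0] * n_arms
    estimates = [0.0] * n_arms

    for t in range(1, n_rounds + 1):
        if t <= n_arms:
            arm = t - 1  # play every arm once so each has an estimate
        else:
            # Average reward plus an exploration bonus that shrinks
            # as the arm accumulates pulls.
            arm = max(
                range(n_arms),
                key=lambda a: estimates[a] + math.sqrt(2 * math.log(t) / counts[a]),
            )
        reward = pull(arm)
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


if __name__ == "__main__":
    # Assumed toy environment: three arms with hidden win rates.
    env_rng = random.Random(3)
    win_rates = [0.2, 0.5, 0.7]

    def pull(arm):
        return 1 if env_rng.random() < win_rates[arm] else 0

    estimates, counts = ucb1(pull, 3, 5000)
    print("pull counts:", counts)
```

Unlike epsilon-greedy, this sketch has no randomness of its own: exploration comes entirely from the bonus term, which favors arms that have been tried least.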

Advantages And Limitations Of Different Exploitation Approaches

Different approaches to exploitation have their own advantages and limitations. Here is a breakdown:

- Greedy algorithm:

- Advantages: Simple and computationally efficient.

- Limitations: Can get stuck in suboptimal arms if exploration is insufficient.

- Epsilon-greedy algorithm:

- Advantages: Balances exploration and exploitation effectively.

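- Limitations: With a fixed epsilon, it keeps exploring at the same rate even after the best arm is clear, and it explores all arms equally regardless of how poor they look.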

- Softmax exploration:

- Advantages: Allows systematic exploration based on probabilities.

- Limitations: Requires tuning of temperature parameter, which affects the balance between exploration and exploitation.

- Thompson sampling:

- Advantages: Bayesian approach accounts for uncertainties and is robust against noise.

- Limitations: Requires a prior and a reward model for each arm. Updates are cheap for simple models such as 0/1 rewards, but can be computationally expensive when the posterior has no closed form.

Achieving the right balance between exploration and exploitation is crucial in multi-armed bandits. Various strategies such as the greedy algorithm, epsilon-greedy algorithm, softmax exploration, and Thompson sampling offer different advantages and limitations in terms of exploiting the best-performing arms. The UCB1 algorithm, with its upper confidence bounds, provides a reliable method for exploiting arms in a multi-armed bandit setting.

By understanding and utilizing these strategies effectively, we can optimize our decision-making process and maximize rewards in various real-world applications.

Advanced Techniques For Exploration And Exploitation

In the world of multi-armed bandit problems, finding the right balance between exploration and exploitation is key to maximizing rewards. While basic strategies like epsilon-greedy and UCB help achieve this balance to some extent, advanced techniques take it a step further.

Let’s dive into two popular methods that enhance optimization results in multi-armed bandits: Thompson sampling and Bayesian exploration-exploitation.

Going Beyond Basic Strategies

Thompson sampling:

- The Thompson sampling technique is rooted in Bayesian inference and offers a probabilistic approach for exploration and exploitation in bandit problems.

- It starts with initializing prior distributions for the potential rewards of each arm.

- During each round, a random sample is drawn from each arm’s distribution.

- The arm with the highest sample value is chosen for exploitation, while the exploration continues by updating the distributions based on the observed rewards.

- This adaptive nature of Thompson sampling allows it to dynamically allocate more trials to the arms with uncertain rewards, ensuring a balance between exploration and exploitation.
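
The steps above can be sketched for the simplest case. This example assumes rewards are 0 or 1 and uses a uniform Beta(1, 1) prior for every arm. Both are modelling assumptions of this sketch, and `thompson_sampling` and `pull` are illustrative names.

```python
import random


def thompson_sampling(pull, n_arms, n_rounds, seed=None):
    """Beta-Bernoulli Thompson sampling. Assumes each reward is 0 or 1.

    Returns (successes, failures) per arm, not counting the prior.
    """
    rng = random.Random(seed)
    successes = [0] * n_arms
    failures = [0] * n_arms

    for _ in range(n_rounds):
        # Draw one plausible win rate per arm from its Beta posterior.
        # Beta(1, 1) is the uniform prior (an assumption of this sketch).
        samples = [
            rng.betavariate(1 + successes[a], 1 + failures[a])
            for a in range(n_arms)
        ]
        arm = max(range(n_arms), key=lambda a: samples[a])
        # Updating the counts is the posterior update for this model.
        if pull(arm) == 1:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return successes, failures


if __name__ == "__main__":
    env_rng = random.Random(4)
    win_rates = [0.2, 0.5, 0.7]

    def pull(arm):
        return 1 if env_rng.random() < win_rates[arm] else 0

    successes, failures = thompson_sampling(pull, 3, 5000, seed=5)
    print("pull counts:", [s + f for s, f in zip(successes, failures)])
```

Arms with few observations have wide posteriors, so their samples are occasionally high enough to win a round. That is how exploration happens here without a separate exploration parameter.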

Bayesian exploration-exploitation:

- Bayesian exploration-exploitation is another advanced technique that leverages the Bayesian framework for multi-armed bandits.

- It utilizes posterior distributions rather than point estimations of arm rewards.

- By maintaining these distributions, it continuously updates its knowledge about each arm’s underlying reward distribution.

- This information is then employed to make informed decisions on exploration and exploitation.

- The algorithm assigns higher probabilities to arms with potentially high rewards, directing more exploration towards the most promising options.

- As it gathers more data, it becomes increasingly confident in its estimates and shifts towards exploitation, maximizing cumulative rewards.
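
For 0/1 rewards, the belief update described above has a simple closed form. As a sketch, assume a $\mathrm{Beta}(\alpha, \beta)$ prior on an arm’s win probability $\theta$ (this conjugate model is an assumption made for illustration, not something the text prescribes). After observing $s$ wins and $f$ losses on that arm:

```latex
\theta \mid s, f \sim \mathrm{Beta}(\alpha + s,\ \beta + f),
\qquad
\mathbb{E}[\theta \mid s, f] = \frac{\alpha + s}{\alpha + \beta + s + f},
\qquad
\mathrm{Var}[\theta \mid s, f] = \frac{(\alpha + s)(\beta + f)}{(\alpha + \beta + s + f)^2\,(\alpha + \beta + s + f + 1)}
```

The variance shrinks roughly in proportion to $1/(s + f)$, which is the sense in which the algorithm becomes more confident in its estimates as data accumulates.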

These advanced techniques go beyond basic strategies by incorporating probabilistic methods and continuously updating beliefs about arm rewards. By doing so, they strike a better balance between exploration and exploitation, leading to improved optimization results in multi-armed bandit problems. Whether through Thompson sampling or Bayesian exploration-exploitation, these approaches enhance the effectiveness of decision-making and provide valuable insights for maximizing rewards in a variety of real-world scenarios.

Real-World Applications

Applying Multi-Armed Bandits To Various Contexts

In order to optimize decision-making processes when faced with exploring new options or exploiting known ones, multi-armed bandits have found a multitude of real-world applications. Let’s explore some of the areas where these techniques have been successfully implemented.

Case Studies Showcasing Successful Implementation Of Exploration And Exploitation Techniques

Here are a few examples of how multi-armed bandits have been effectively utilized in different contexts:

- Online advertising optimization: Many online platforms use multi-armed bandit algorithms to determine how to allocate ad impressions among various advertisements. By continuously exploring new ads and exploiting those that generate the most desirable outcomes, companies can maximize their advertising revenue and improve user experience.

- Clinical trials: In the field of medicine, multi-armed bandits have been employed to efficiently allocate resources in clinical trials. By dynamically assigning patients to different treatment options based on ongoing results, researchers can expedite the discovery of effective treatments while minimizing potential harm to patients.

- Dynamic pricing: E-commerce platforms often employ multi-armed bandit algorithms to optimize their pricing strategies. By continuously exploring different price points and exploiting those that yield the highest conversion rates or profits, retailers can maximize their revenue while remaining competitive in a dynamic market.

- Website layout optimization: Multi-armed bandits can also be utilized to optimize website layouts and user interfaces. By exploring different design elements and exploiting those that lead to higher engagement rates or conversions, businesses can create more user-friendly and effective online experiences.

- Content recommendation: Online platforms that provide personalized content recommendations rely on multi-armed bandits to optimize the selection of articles, videos, or products to display to users. By balancing exploration of new items with exploitation of highly relevant ones, these platforms can enhance user satisfaction and engagement.

- Robotics and autonomous systems: Multi-armed bandits have applications in robotics and autonomous systems, where decision-making is crucial. For example, in robotic exploration scenarios, these algorithms can help robots efficiently search for valuable information by balancing exploration of unknown areas with exploitation of known rewards.

- A/B testing: Multi-armed bandit algorithms are used in the field of A/B testing to optimize the allocation of user traffic between different variations of a webpage or application. By dynamically assigning users to different versions and learning from their interactions, businesses can quickly identify the most successful options and improve their products or services.

These case studies highlight the versatility and effectiveness of multi-armed bandits in various industries and problem domains. By intelligently balancing exploration and exploitation, these techniques empower decision-makers to make informed choices and drive optimal outcomes.

Challenges And Considerations

Implementing multi-armed bandits comes with its fair share of challenges and considerations. It’s important to recognize these factors to ensure an effective balance between exploration and exploitation strategies. Here are some potential challenges to be aware of and key factors to consider:

Potential Challenges In Implementing Multi-Armed Bandits:

- Limited initial data: In the early stages of implementing a multi-armed bandit algorithm, there might be limited data available about the arms or options being tested. This can pose challenges in accurately determining the optimal strategy.

- Uncertainty in rewards: It can be difficult to precisely estimate the rewards associated with each arm, especially if the available data is sparse or noisy. The uncertainty in rewards can impact the decision-making process and hinder the exploration-exploitation balance.

- Exploration-exploitation trade-off: Striking the right balance between exploring new options (exploration) and exploiting the known good options (exploitation) is crucial in maximizing the overall performance. It’s important to find the optimal ratio between exploration and exploitation for the specific context.

Factors To Consider When Choosing Exploration And Exploitation Strategies:

- Time constraints: Depending on the time frame available, the emphasis on exploration or exploitation may need to be adjusted. If time is limited, a more exploitation-focused strategy might be preferred to maximize short-term gains.

- Risk tolerance: The level of risk that an organization is willing to accept can influence the choice between exploration and exploitation. If the stakes are high and there is a low tolerance for failure, a more exploitation-focused approach may be favored.

- Resource allocation: Consideration should be given to the allocation of resources for exploration and exploitation activities. It’s important to ensure that sufficient resources are dedicated to exploration to gather valuable insights without neglecting the exploitation of known good options.

- Contextual factors: The specific context in which the multi-armed bandit algorithm is being implemented should be taken into account. Factors such as market dynamics, user preferences, and competition can influence the optimal balance between exploration and exploitation.

The Role Of Experimentation In Optimizing Bandit Algorithms:

Experimentation plays a vital role in optimizing bandit algorithms. By continuously experimenting, collecting data, and refining the algorithm, it’s possible to improve performance and make better-informed decisions. Here are a few key points to consider:

- Experimentation allows for gathering data on the performance of different arms, helping to refine estimates of their rewards and reduce uncertainties.

- Through experimentation, the algorithm can dynamically adapt and adjust the exploration-exploitation balance based on the evolving rewards and insights gathered.

- Experimentation provides an opportunity to test different strategies and their impact on the overall performance, enabling the optimization of the bandit algorithm.

- Data-driven experimentation allows for iterating and fine-tuning the algorithm over time, leading to improved decision-making and better outcomes.
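
One inexpensive way to run this kind of experiment is offline simulation before anything is deployed. The sketch below compares several epsilon values on simulated 0/1 rewards. The win rates, round counts, and function names are all hypothetical, and real results depend on how closely the simulation matches the actual environment.

```python
import random


def run_epsilon_greedy(win_rates, n_rounds, epsilon, seed):
    """Simulate epsilon-greedy on Bernoulli arms; return average reward per round."""
    rng = random.Random(seed)
    n_arms = len(win_rates)
    counts = [0] * n_arms
    estimates = [0.0] * n_arms
    total = 0
    for _ in range(n_rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                           # explore
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        reward = 1 if rng.random() < win_rates[arm] else 0
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total += reward
    return total / n_rounds


def compare_epsilons(win_rates, epsilons, n_rounds=1000, n_runs=30):
    """Average each epsilon's per-round reward over several seeded runs."""
    results = {}
    for eps in epsilons:
        scores = [
            run_epsilon_greedy(win_rates, n_rounds, eps, seed)
            for seed in range(n_runs)
        ]
        results[eps] = sum(scores) / n_runs
    return results


if __name__ == "__main__":
    results = compare_epsilons([0.2, 0.5, 0.7], [0.0, 0.05, 0.1, 0.3])
    for eps, score in results.items():
        print(f"epsilon={eps:<5} average reward per round={score:.3f}")
```

Averaging over several seeded runs matters because a single run of a bandit algorithm can be lucky or unlucky in its early pulls.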

To excel in balancing exploration and exploitation in multi-armed bandits, organizations must address potential challenges, consider contextual factors, and leverage experimentation to optimize algorithms continuously. This dynamic process ensures the algorithm can adapt to changing conditions and deliver continuous performance improvements.

Frequently Asked Questions On How Multi-Armed Bandits Balance Exploration Vs Exploitation

How Do Multi-Armed Bandits Strike A Balance Between Exploration And Exploitation?

Bandit algorithms such as epsilon-greedy, UCB1, and Thompson sampling use the rewards observed so far to decide when to try less-tested options and when to stick with the best-known one.

Can Multi-Armed Bandits Make Decisions Without Prior Knowledge?

Yes. Bandit algorithms can start with little or no knowledge of the arms and learn reward estimates from the feedback they receive as they go.

What Is The Role Of Exploration In Multi-Armed Bandit Algorithms?

Exploration allows multi-armed bandits to gather information about different options to make better decisions in the long run.

How Does Exploitation Benefit Multi-Armed Bandit Models?

Exploitation helps multi-armed bandits maximize their rewards by focusing on options that have proven to be successful.

Are Multi-Armed Bandits Used In Real-World Scenarios?

Yes, multi-armed bandits are widely used in various applications like online advertising, clinical trials, and recommendation systems to optimize decision-making processes.

Conclusion

The usage of multi-armed bandits is a powerful approach to tackling the exploration-exploitation dilemma in various real-life scenarios. These algorithms strike a delicate balance between gathering new information and maximizing the overall rewards. By continuously adapting and learning from prior experiences, multi-armed bandits enable businesses to optimize their decision-making processes.

It is important to understand that exploration and exploitation are not mutually exclusive. In fact, they work hand in hand to inform and refine each other. Multi-armed bandits provide a systematic framework for businesses to make informed decisions by dynamically allocating resources to explore new options or exploit the currently known best choice.

By leveraging multi-armed bandits, companies can gain valuable insights, improve customer satisfaction, and achieve higher returns on investment. Moreover, the flexibility and adaptability of these algorithms make them applicable to a wide range of industries. From online advertising to clinical trials, multi-armed bandits offer a robust solution to the exploration-exploitation trade-off.

The utilization of multi-armed bandits empowers businesses to optimize their decision-making process by effectively managing exploration and exploitation, ultimately leading to better outcomes and improved success in an ever-evolving world.