Inside Transformers – A Deep Dive Into Attention Mechanisms explores how attention works inside transformer models and why it matters for processing and understanding natural language.

With concise explanations and practical examples, this article shows how attention improves language processing tasks such as machine translation and sentiment analysis. It covers the key concepts, architectures, and applications, making it a useful resource for anyone who wants to understand and apply this AI technique.

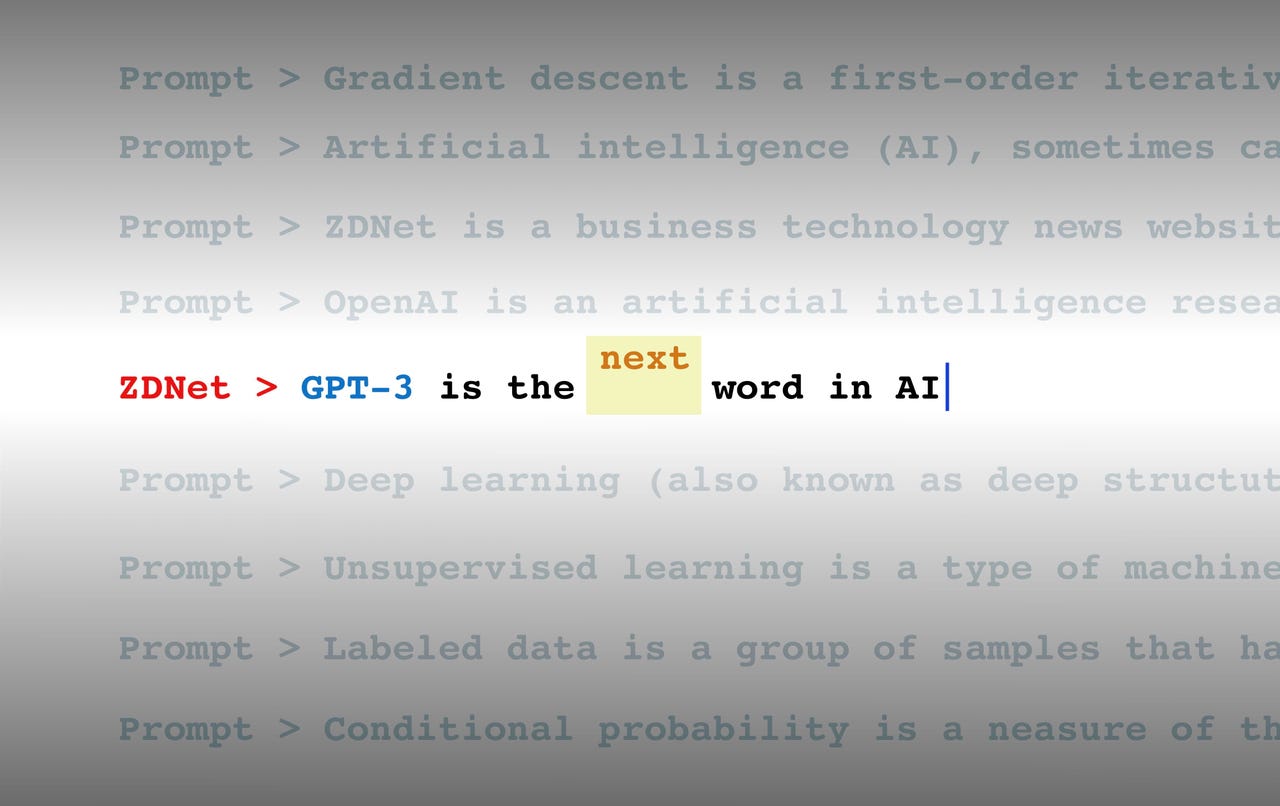

Credit: www.hodinkee.com

What Are Attention Mechanisms? Exploring Their Role In Transformers

Understanding The Fundamental Concept Of Attention Mechanisms

Attention mechanisms are a fundamental concept in the field of deep learning, particularly in the realm of transformer models. These mechanisms are inspired by human perception and enable neural networks to focus on specific parts of the input data while ignoring irrelevant information.

Here are some key points to help you grasp the concept of attention mechanisms:

- Attention mechanisms allow neural networks to selectively concentrate on different parts of the input sequence. This selective focus is similar to the way humans pay attention to certain aspects of their surroundings while filtering out distractions.

- Unlike recurrent neural networks, which process a sequence one step at a time, attention mechanisms compare every position with every other position at once, so the computation can run in parallel. This parallelism makes training on modern hardware much faster.

- Attention mechanisms rely on three components: queries, keys, and values. A query represents what the current position is looking for. Each key describes what a position offers to be matched against. Each value carries the information that is actually passed along.

- Attention mechanisms calculate the similarity between the query and each key. In transformers this is a scaled dot product followed by a softmax. The result determines how much weight each value receives, which lets the model dynamically adjust its focus across the input sequence.

- The attention weights assigned to each value are used to compute a weighted sum, which represents the attended information. This weighted sum is then combined with other inputs or processed further to produce the final output.

- Attention mechanisms are often visualized as heatmaps, where the intensity of color indicates the level of attention assigned to different positions in the input sequence.
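The query/key/value steps above can be sketched as scaled dot-product attention, the form used in the original transformer: softmax(Q K^T / sqrt(d_k)) V.

Below is a minimal NumPy sketch. It assumes small random matrices stand in for real query, key, and value vectors. The function names are illustrative and do not come from any particular library.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row-wise max for numerical stability before exponentiating.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v) -> output (n_q, d_v), weights (n_q, n_k)."""
    d_k = Q.shape[-1]
    # Similarity of every query with every key, scaled by sqrt(d_k).
    scores = Q @ K.T / np.sqrt(d_k)
    # Normalize each query's scores into weights that sum to 1.
    weights = softmax(scores, axis=-1)
    # Each output row is a weighted sum of the value vectors.
    return weights @ V, weights

rng = np.random.default_rng(0)
Q = rng.normal(size=(2, 4))   # 2 queries
K = rng.normal(size=(3, 4))   # 3 keys
V = rng.normal(size=(3, 5))   # 3 values
out, w = scaled_dot_product_attention(Q, K, V)
print(out.shape, w.shape)  # (2, 5) (2, 3)
print(w.sum(axis=-1))      # each row sums to 1, up to float rounding
```

Each row of `w` is the distribution that an attention heatmap displays for one query.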

How Attention Mechanisms Enable Information Sharing And Communication

Attention mechanisms play a crucial role in facilitating information sharing and communication between different parts of a neural network. Here’s a closer look at how this communication takes place:

- Attention mechanisms allow different parts of a neural network to exchange information by attending to relevant details in each other’s outputs. This helps in capturing dependencies and relationships between different elements of the input.

- When analyzing sequences or time series data, attention mechanisms let the model consider all of the available context, rather than being limited to a fixed-size window or only the most recent inputs.

- By attending to different parts of the input sequence, attention mechanisms can capture long-range dependencies between distant elements. This is particularly important in tasks such as machine translation, where understanding the context of a word might require considering the entire sentence.

- Attention mechanisms also allow information to flow in both directions. In an encoder, each token can attend to tokens both before and after it. In a decoder, each new token attends to the previously generated outputs, so the model can refine its predictions as generation proceeds.
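The difference between encoder-style (bidirectional) and decoder-style (causal) attention comes down to a mask over the score matrix.

The sketch below makes one simplification: it uses the token vectors themselves as queries, keys, and values, with no learned projections. A real transformer would project them first. `masked_attention` is a hypothetical helper name.

```python
import numpy as np

def masked_attention(Q, K, V, mask):
    """mask[i, j] is True where query i is allowed to attend to key j."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)
    # Disallowed positions get -inf so softmax assigns them zero weight.
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ V, weights

n, d = 4, 8
rng = np.random.default_rng(1)
X = rng.normal(size=(n, d))

# Encoder-style (bidirectional): every token sees every token.
bidirectional = np.ones((n, n), dtype=bool)
# Decoder-style (causal): token i sees only tokens 0..i.
causal = np.tril(np.ones((n, n), dtype=bool))

_, w_bi = masked_attention(X, X, X, bidirectional)
_, w_causal = masked_attention(X, X, X, causal)
print(np.round(w_causal, 2))  # entries above the diagonal are all 0
```

The causal mask is what allows a decoder to be trained on whole sequences in parallel while still never looking at future tokens.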

The Crucial Role Of Attention Mechanisms In Improving Performance And Efficiency

Attention mechanisms have revolutionized the field of deep learning by significantly improving the performance and efficiency of models like transformers. Here’s why attention mechanisms are crucial in these advancements:

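- Attention mechanisms enable transformers to handle long sequences of input data more effectively. Recurrent models struggle with long sequences for two reasons. Information must pass through every intermediate step, which weakens long-range signals. The same step-by-step structure also prevents parallel computation across time steps. Attention connects any two positions directly, letting the model focus on the relevant parts of the input. The trade-off is that full self-attention compares every pair of positions, so its cost grows quadratically with sequence length.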

- By attending to different parts of the input sequence, attention mechanisms help transformers capture complex relationships and dependencies between various elements. This is crucial in tasks such as language understanding, where words in a sentence might influence each other’s meaning.

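- Attention mechanisms work on inputs of varying lengths, because the attention weights are computed over however many tokens are present. When sequences of different lengths are batched together, the shorter ones are padded, and a padding mask stops attention from reaching the padded positions. Inputs longer than the model’s maximum context length still need truncation or chunking. This flexibility is particularly useful in natural language processing tasks, where sentences vary in length.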

- The self-attention mechanism, a specific type of attention used in transformers, enables the model to capture global dependencies efficiently. This helps in understanding the overall context of the input sequence and improves the quality of predictions.

- Attention weights show which parts of the input the model drew on, which can make deep learning models somewhat easier to interpret. This matters in domains where explainability is critical, such as healthcare and finance. However, attention weights alone are not always a faithful explanation of a model’s prediction.
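Padding masks for variable-length inputs can be sketched the same way.

The hypothetical `padded_batch_attention` helper below makes two assumptions:
- The batch has already been padded to a common length.
- The token vectors serve directly as queries, keys, and values, which keeps the example short.

```python
import numpy as np

def padded_batch_attention(X, lengths):
    """X: (batch, max_len, d) padded batch; lengths: number of real tokens per sequence."""
    _, max_len, d = X.shape
    # key_mask[b, j] is True for real tokens and False for padding.
    key_mask = np.arange(max_len)[None, :] < np.asarray(lengths)[:, None]
    # Token vectors double as queries, keys, and values (no learned projections).
    scores = X @ X.transpose(0, 2, 1) / np.sqrt(d)   # (batch, max_len, max_len)
    # Padding keys get -inf, so softmax gives them exactly zero weight.
    scores = np.where(key_mask[:, None, :], scores, -np.inf)
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    # Outputs at padded query positions are computed too; downstream code ignores them.
    return weights @ X, weights

rng = np.random.default_rng(2)
X = rng.normal(size=(2, 5, 8))
X[1, 3:] = 0.0  # the second sequence has 3 real tokens followed by 2 padding slots
out, w = padded_batch_attention(X, lengths=[5, 3])
print(w[1].round(2))  # the last two columns are 0: no query attends to padding
```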

Attention mechanisms are a fundamental concept in the realm of transformer models. They enable information sharing and communication between different parts of a neural network, leading to improved performance and efficiency. By understanding the concept and role of attention mechanisms, we can dive deeper into transformers and explore their unprecedented capabilities.

The Evolution Of Attention Mechanisms In Transformers

Tracing The History And Evolution Of Attention Mechanisms In Natural Language Processing Tasks

In the field of natural language processing (NLP), attention mechanisms have played a pivotal role in improving the performance of various tasks. Let’s dive into the history and evolution of attention mechanisms in NLP to understand their significance in the development of the transformer model.

- Early developments:

  - Attention mechanisms were first introduced in NLP for neural machine translation. There, they freed encoder-decoder models from compressing an entire source sentence into a single fixed-length vector and helped them capture long-range dependencies.

  - The strong results in machine translation highlighted the potential of attention for improving other language tasks.

- Attention mechanism in the transformer model:

  - The transformer model, introduced by Vaswani et al. in 2017 in the paper "Attention Is All You Need", transformed the field of NLP with its attention-based architecture.

  - Unlike recurrent neural networks, the transformer uses self-attention to process all words in a sequence in parallel, enabling efficient computation and capturing global dependencies.

  - Self-attention lets each word weigh every other word in the same sequence, adapting their importance dynamically. When generating output, a separate encoder-decoder (cross-) attention layer lets each output position focus on the relevant parts of the input sequence.

- Enhanced performance and flexibility:

  - Attention mechanisms in the transformer model provide several advantages over previous architectures:

    - Improved long-range dependencies: By creating direct connections between distant words, attention mechanisms overcome the limitations of step-by-step sequential processing.

    - Parallelization: Self-attention processes all positions of a sequence at once, which makes transformer training highly efficient. Autoregressive generation, however, still produces output one token at a time.

    - Fewer contextual limitations: Recurrent models suffer from vanishing or exploding gradients over long sequences, whereas attention lets the model access and use information from any part of the input sequence directly.

    - Multi-head attention: The transformer employs multiple attention heads, enabling it to capture different types of dependencies and learn diverse representations.
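In the original transformer, the decoder looks at the input sequence while generating each output word through encoder-decoder (cross-) attention. Queries come from the decoder, while keys and values come from the encoder.

Below is a minimal NumPy sketch under these assumptions:
- Random matrices stand in for the learned projections `W_q`, `W_k`, `W_v`; these names are hypothetical.
- There is a single head and no masking.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def cross_attention(decoder_states, encoder_states, W_q, W_k, W_v):
    # Queries come from the sequence being generated...
    Q = decoder_states @ W_q
    # ...while keys and values come from the encoded input sequence.
    K = encoder_states @ W_k
    V = encoder_states @ W_v
    weights = softmax(Q @ K.T / np.sqrt(K.shape[-1]))  # (tgt_len, src_len)
    return weights @ V, weights

rng = np.random.default_rng(3)
d_model, d_k = 8, 4
src = rng.normal(size=(6, d_model))   # 6 encoded source tokens
tgt = rng.normal(size=(2, d_model))   # 2 target tokens generated so far
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
context, alignment = cross_attention(tgt, src, W_q, W_k, W_v)
print(context.shape, alignment.shape)  # (2, 4) (2, 6)
```

Each row of `alignment` is a soft alignment between one target position and every source token. This is the kind of matrix often plotted for machine translation.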

Analyzing The Breakthrough Introduction Of Attention Mechanisms In The Transformer Model

The introduction of attention mechanisms in the transformer model marked a significant breakthrough in NLP. Let’s delve into the key aspects that made the attention mechanism in the transformer model so revolutionary:

- Replacing sequential processing:

  - Earlier NLP models were limited in how they combined information across a sequence. Recurrent neural networks (RNNs) process tokens one at a time. Convolutional neural networks (CNNs) see only a fixed local window per layer, so they need many stacked layers to connect distant words.

  - The transformer model, with its attention mechanism, processes all words in the sequence in parallel, reducing training time significantly.

- Efficient utilization of context:

  - Attention mechanisms allow the transformer to pay attention to different parts of the input sequence, assigning importance to relevant words while generating each output word.

  - This dynamic utilization of context helps the model capture long-range dependencies effectively and produce more accurate predictions.

- Self-attention for capturing dependencies:

  - The self-attention mechanism in the transformer model allows for capturing both local and global dependencies within the input sequence.

  - By attending to different positions and calculating attention weights, the model can learn correlations between words, leading to enhanced contextual understanding.

- Attention heads for diverse representation:

  - The transformer model incorporates multiple attention heads, enabling it to capture various types of dependencies.

  - Each attention head uses its own learned projections, so different heads can focus on different relationships in the input sequence. This facilitates the learning of diverse representations and improves performance on a wide range of NLP tasks.

The breakthrough introduction of attention mechanisms in the transformer model has revolutionized the field of NLP, enhancing performance and accelerating training through parallel computation.

Multi-Head Attention: Expanding The Power Of Attention Mechanisms

Examining The Concept Of Multi-Head Attention And Its Significance In Transformer Models

Multi-head attention is a crucial component of transformer models, revolutionizing the field of natural language processing. It allows the model to focus on different parts of the input sequence simultaneously, enabling more robust and comprehensive modeling of dependencies. Let’s delve deeper into the concept and understand why it holds such significance.

- Multi-head attention projects the input into several separate sets of queries, keys, and values, one per attention head, each in a lower-dimensional subspace. Every head attends over all of the input tokens. Because each head’s projections are learned separately, different heads can capture distinct patterns and relationships.

- By incorporating multiple attention heads, the model can extract various types of information and capture different dependencies within the input sequence. This provides a more nuanced understanding of the context, making it highly effective for tasks like machine translation, sentiment analysis, and question answering.

- The outputs of multiple attention heads are then concatenated and linearly transformed, allowing the model to leverage the collective knowledge acquired by each head. This fused representation retains the benefits of individual attention heads while gaining a broader perspective.

- Performing multi-head attention in parallel improves efficiency, as the model can leverage parallel computing capabilities to process different attention heads simultaneously. This parallelization significantly speeds up computation, making transformer models more scalable and practical for real-world applications.
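The split, attend, concatenate, and project steps above can be sketched in NumPy as multi-head self-attention.

This sketch makes three assumptions:
- Random, scaled matrices replace trained weights.
- There is no masking or bias.
- `d_model` divides evenly by `num_heads`.

Slicing one `d_model x d_model` projection into per-head chunks is equivalent to giving each head its own smaller projection matrix.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_self_attention(X, W_q, W_k, W_v, W_o, num_heads):
    """X: (n, d_model); W_q, W_k, W_v, W_o: (d_model, d_model)."""
    n, d_model = X.shape
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads

    def split_heads(M):
        # (n, d_model) -> (num_heads, n, d_head): each head gets its own slice of the projection.
        return M.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split_heads(X @ W_q), split_heads(X @ W_k), split_heads(X @ W_v)
    # Every head attends over all n tokens; the head axis is processed as one batched matmul.
    weights = softmax(Q @ K.transpose(0, 2, 1) / np.sqrt(d_head))  # (heads, n, n)
    heads = weights @ V                                              # (heads, n, d_head)
    # Concatenate the heads back to (n, d_model) and mix them with the output projection.
    concat = heads.transpose(1, 0, 2).reshape(n, d_model)
    return concat @ W_o, weights

rng = np.random.default_rng(4)
n, d_model, num_heads = 5, 8, 2
X = rng.normal(size=(n, d_model))
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) / np.sqrt(d_model) for _ in range(4))
out, weights = multi_head_self_attention(X, W_q, W_k, W_v, W_o, num_heads)
print(out.shape, weights.shape)  # (5, 8) (2, 5, 5)
```

Returning `weights` per head makes it possible to compare the attention pattern of each head separately.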

Understanding How Multi-Head Attention Allows Simultaneous Modeling Of Multiple Dependencies

One of the significant strengths of multi-head attention is its ability to capture multiple dependencies within a given sequence. Here’s how it achieves this:

- Each attention head in multi-head attention can specialize in modeling a specific type of dependency. For instance, one attention head may excel at capturing syntactic relationships, while another might focus more on semantic associations. By considering these diverse dependencies, the model gains a richer understanding of the input sequence.

- Because all heads run in parallel over the whole sequence, multi-head attention does not have to process the input step by step like traditional recurrent architectures. A single layer can combine global context with local information, resulting in more robust and accurate representations.

- The multi-head attention mechanism enhances the ability of the model to handle long-range dependencies, which are often challenging for traditional recurrent neural networks. It can effectively capture relationships between distant tokens, enabling better context understanding and improving performance across various natural language processing tasks.

Investigating The Benefits Of Multi-Head Attention In Overcoming Limitations Of Single Attention Heads

Multi-head attention overcomes the limitations of single attention heads by leveraging the collective power of multiple heads. Let’s explore some of the key benefits:

- Enhanced representation: By combining information from multiple attention heads, multi-head attention produces more expressive and rich representations. This allows the model to capture various aspects of the input sequence, leading to a deeper understanding of the context and improved performance.

- Regularization: Multiple heads may add diversity to the learned representation, which some argue helps reduce overfitting. This effect is not guaranteed, however. Studies of trained models have found that many heads are redundant and can be pruned with little loss in accuracy.

- Robustness to noise: Different heads attend to the sequence in different ways, so multi-head attention may reduce the impact of noisy or ambiguous inputs. The model can rely on the most relevant signals rather than on a single, possibly misleading, pattern.

- Interpretability: Each head produces its own attention weights, which can be inspected to see which parts of the input sequence the model drew on. This can help in applications where explainability is important, such as medical diagnosis or legal analysis, though attention weights offer only a partial view of the model’s reasoning.

Multi-head attention expands the power of attention mechanisms in transformer models by enabling simultaneous modeling of multiple dependencies, enhancing representation, overcoming limitations of single attention heads, and improving the performance of natural language processing tasks. Its versatility, efficiency, and interpretability make it a groundbreaking innovation in the field of deep learning.

So, let’s appreciate the transformative capabilities of multi-head attention in advancing the world of AI.

Self-Attention: A Closer Look At The Inner Workings Of Transformers

Diving Into The Concept Of Self-Attention And Its Integral Role In Transformer Models

Self-attention is a fundamental concept at the heart of transformers, a type of deep learning model widely used in natural language processing tasks. It allows the model to focus on different parts of the input sequence during processing, enabling it to better capture relationships and dependencies within the data.

Here’s a closer look at the key points:

- Self-attention enables the model to calculate attention weights for each position within a sequence, considering the relationships between all positions simultaneously.

- By comparing each position to all other positions, self-attention captures the importance or relevance of each position to others in the sequence.

- The attention weights derived from self-attention are then used to compute a weighted sum of the value vectors, giving more weight to positions that are deemed more important.

- This mechanism allows the model to incorporate contextual information from all positions, rather than only relying on a fixed context window, as seen in traditional sequence models.

- Self-attention is essential in capturing long-range dependencies, meaning that the model can effectively relate elements that are far apart from each other in the sequence.

- Compared to recurrent neural networks, which typically struggle with long-range dependencies, transformers excel at modeling these relationships through self-attention.
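In self-attention, the queries, keys, and values are all projections of the same sequence. A single matrix product therefore compares every position with every other position.

Below is a single-head NumPy sketch. It assumes random matrices in place of the learned projections `W_q`, `W_k`, `W_v`; these names are hypothetical.

```python
import numpy as np

def self_attention(X, W_q, W_k, W_v):
    # Queries, keys, and values are all projections of the same sequence X.
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])     # (n, n): every position vs. every position
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ V, weights                 # outputs are weighted sums of value vectors

rng = np.random.default_rng(5)
n, d_model, d_k = 6, 8, 4
X = rng.normal(size=(n, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) / np.sqrt(d_model) for _ in range(3))
out, weights = self_attention(X, W_q, W_k, W_v)
print(weights.shape)  # (6, 6)
# Row i shows how much position i draws on each position, including the most distant one.
print(weights[0].round(2))
```

Position 0 reaches position 5 in one step here, whereas a recurrent network would have to carry that information through every intermediate state.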

Exploring How Self-Attention Enables Capturing Relationships Between Different Positions Within A Sequence

Self-attention introduces a powerful way to capture the relationships between different positions within a sequence. Here are the key points:

- Self-attention on its own has no notion of word order, so transformers add positional encodings to the input embeddings. Given that positional information, self-attention lets the model adaptively learn how much each position matters based on its contextual relevance, rather than relying on fixed positional relationships.

- Each position in the sequence is represented as a query, a key, and a value vector.

- By applying attention mechanisms, the model computes attention scores between each query and key pair, determining the importance of each key given the query.

- These attention scores are then used to weight the corresponding value vectors, allowing the model to selectively attend to different positions based on their relevance to the query.

- This adaptive attention mechanism enables the model to capture both local and global relationships within the sequence, enhancing its ability to understand complex patterns and dependencies.
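Self-attention by itself ignores token order: shuffling the input tokens just shuffles the outputs in the same way. This is why transformers add positional information to their inputs.

The sketch below has two parts:
- The sinusoidal encoding from the original transformer, PE[pos, 2i] = sin(pos / 10000^(2i/d_model)) and PE[pos, 2i+1] = cos(pos / 10000^(2i/d_model)). It assumes an even `d_model`; many models use learned position embeddings instead.
- A check of the order-blindness described above, using a simplified self-attention with no learned projections.

```python
import numpy as np

def sinusoidal_positional_encoding(n, d_model):
    """Returns an (n, d_model) array; d_model is assumed to be even."""
    pos = np.arange(n)[:, None]
    two_i = np.arange(0, d_model, 2)[None, :]
    angles = pos / np.power(10000.0, two_i / d_model)
    pe = np.zeros((n, d_model))
    pe[:, 0::2] = np.sin(angles)   # even dimensions
    pe[:, 1::2] = np.cos(angles)   # odd dimensions
    return pe

def self_attention(X):
    # Simplified self-attention: token vectors used directly as queries, keys, and values.
    scores = X @ X.T / np.sqrt(X.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    w /= w.sum(axis=-1, keepdims=True)
    return w @ X

rng = np.random.default_rng(6)
X = rng.normal(size=(5, 8))
perm = np.array([4, 2, 0, 1, 3])

# Without positions, shuffling the tokens just shuffles the outputs the same way.
print(np.allclose(self_attention(X[perm]), self_attention(X)[perm]))  # True

# After adding positional encodings, each token's representation depends on where it sits,
# so the same shuffle generally no longer just reorders the outputs.
pe = sinusoidal_positional_encoding(5, 8)
shuffled_with_pe = self_attention(X[perm] + pe)
reordered_with_pe = self_attention(X + pe)[perm]
print(np.allclose(shuffled_with_pe, reordered_with_pe))
```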

Unveiling The Power Of Self-Attention In Modeling Long-Range Dependencies And Capturing Contextual Information

One of the major strengths of the self-attention mechanism is its ability to model long-range dependencies and capture contextual information. Here’s what you need to know:

- Traditional sequence models struggle with capturing long-range dependencies as they rely on recurrent connections that might fail to propagate information over long distances.

- Self-attention solves this problem by allowing the model to directly attend to any position in the sequence, regardless of its distance from the current position.

- By attending to relevant positions, the model can incorporate contextual information from different parts of the sequence without being limited by fixed window sizes or recurrent connections.

- This enables the model to understand the broader context and relationships between elements in the sequence, leading to improved performance in various natural language processing tasks.

- Self-attention enables transformers to encode rich contextual information, making them highly effective in tasks such as machine translation, sentiment analysis, and language understanding.

Self-attention plays a pivotal role in transformer models by enabling them to capture relationships between different positions within a sequence. It allows the models to effectively model long-range dependencies and capture contextual information, leading to their success in various natural language processing tasks.

Natural Language Processing: Revolutionizing Language Understanding

Attention mechanisms have become one of the most transformative technologies in the field of natural language processing (NLP). These powerful mechanisms have revolutionized the way we understand and process language, paving the way for significant advancements in various NLP tasks.

In this section, we will delve into the impact of attention mechanisms on machine translation, text summarization, and question answering systems. Additionally, we’ll explore real-world applications where attention mechanisms have significantly improved performance and accuracy.

Examining How Attention Mechanisms Have Transformed Natural Language Processing Tasks

- Attention mechanisms have revolutionized the way NLP models understand and process language.

- These mechanisms allow models to focus on specific parts of a sentence or document, loosely analogous to how people focus on the most relevant words when reading.

- This attention-based approach has significantly enhanced the performance of various NLP tasks, such as machine translation, text summarization, and question answering systems.

Analyzing The Impact Of Attention Mechanisms On Machine Translation, Text Summarization, And Question Answering Systems

- Machine translation: Attention mechanisms have greatly improved the quality of machine translation by enabling models to selectively attend to relevant parts of the source sentence while generating the translated output.

- Text summarization: Attention mechanisms have made text summarization more accurate by allowing models to identify the most salient information in a document.

- Question answering systems: With attention mechanisms, question answering systems can better align questions with relevant parts of the text, improving overall accuracy and efficiency.

Showcasing Real-World Applications Where Attention Mechanisms Have Significantly Improved Performance And Accuracy

- Sentiment analysis: Attention mechanisms have proven invaluable in sentiment analysis tasks, as they help models recognize key words and phrases that contribute to the overall sentiment expressed in a text.

- Named entity recognition: By utilizing attention mechanisms, models can more accurately identify and classify named entities in a text, such as people, organizations, and locations.

- Document classification: Attention mechanisms have enhanced document classification tasks by allowing models to focus on important sections of a document, leading to more accurate predictions.

- Machine comprehension: Attention mechanisms have revolutionized machine comprehension tasks by enabling models to align questions with relevant parts of the text, improving their ability to accurately answer questions.

Attention mechanisms have played a pivotal role in transforming natural language processing tasks. They have not only enhanced the performance and accuracy of various NLP applications but also opened up new possibilities for developing advanced language understanding models. With the continued advancements in attention-based approaches, the future of NLP looks more promising than ever before.

Computer Vision: Enhancing Visual Representation Learning

Investigating The Application Of Attention Mechanisms In Computer Vision Tasks

Attention mechanisms have revolutionized the field of computer vision, enabling more accurate visual representation learning. By mimicking human visual attention, these mechanisms allow models to focus on relevant features in an image and ignore irrelevant ones. Let’s delve deeper into the key points:

- Attention mechanisms in computer vision: These mechanisms have been extensively investigated and applied in various computer vision tasks, such as object recognition, image captioning, and image generation. They enhance the performance of these tasks by improving the model’s ability to capture crucial visual information.

- Focusing on relevant features: Attention mechanisms enable models to attend to specific parts of an image that are important for recognition or understanding. By focusing on these regions, models can extract more informative features, leading to better classification and localization.

- Improving object recognition: Attention mechanisms play a vital role in object recognition tasks. They allow models to emphasize discriminative regions, improving their ability to accurately identify and classify objects within an image. This helps in overcoming challenges such as occlusion, scale variations, and cluttered backgrounds.

- Breakthroughs in image captioning: Attention mechanisms have significantly advanced the field of image captioning. By attending to different regions of an image while decoding, models can generate more accurate and descriptive captions. This has greatly improved the quality of generated captions, making them more coherent and contextually relevant.

- Advancements in object detection: Attention mechanisms have also contributed to substantial improvements in object detection algorithms. By assigning higher weights to region proposals that contain objects of interest, models can effectively filter out irrelevant regions and achieve more precise localization. This has led to significant progress in object detection performance across various datasets.

- Enhancements in image generation: Attention mechanisms have been instrumental in improving image generation tasks, such as super-resolution and inpainting. By attending to relevant regions or features, models can generate more realistic and visually pleasing images. This has opened up possibilities in generating high-resolution images from low-resolution inputs and filling in missing parts of images accurately.

- Evolving research and future potential: The application of attention mechanisms in computer vision is a rapidly evolving field. Ongoing research continues to explore new ways of incorporating attention into models and extending its capabilities. This holds promising potential for further improvements in various computer vision tasks.
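The idea of attending to the relevant regions of an image can be sketched as attention pooling over a feature map: each spatial location is treated as one token.

This is a hypothetical illustration, not a specific published model. It assumes three things:
- An (H, W, C) feature map from some image encoder.
- A single query vector describing what to look for.
- Random features with one planted, strongly matching location in place of real image data.

```python
import numpy as np

def spatial_attention_pool(feature_map, query):
    """feature_map: (H, W, C) features from some image encoder; query: (C,) vector."""
    H, W, C = feature_map.shape
    # Treat every spatial location as one token with a C-dimensional feature vector.
    regions = feature_map.reshape(H * W, C)
    # Scaled dot-product relevance of each location to the query.
    scores = regions @ query / np.sqrt(C)
    e = np.exp(scores - scores.max())
    weights = e / e.sum()
    # Weighted sum of location features: a summary focused on the relevant region.
    attended = weights @ regions
    # Reshaped weights form the kind of heatmap often drawn over the image.
    return attended, weights.reshape(H, W)

rng = np.random.default_rng(7)
fmap = rng.normal(size=(4, 4, 16))
query = np.ones(16)
fmap[1, 2] = 3.0 * query   # plant one location whose features strongly match the query
summary, heatmap = spatial_attention_pool(fmap, query)
print(heatmap.shape)                 # (4, 4)
print(heatmap.argmax() == 1 * 4 + 2)  # True: the planted location gets the most weight
```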

As attention mechanisms continue to push the boundaries of computer vision, we can expect even more remarkable breakthroughs in image understanding, enabling machines to perceive the visual world with greater accuracy and context-awareness.

The Future Of Attention Mechanisms In Deep Learning

Speculating On The Future Advancements And Applications Of Attention Mechanisms

Attention mechanisms have already made a significant impact on the field of deep learning, revolutionizing how models process and understand complex data. As we look ahead, it becomes clear that attention mechanisms will continue to play a critical role in driving innovation and furthering advancements in this domain.

Here are some key points to consider when speculating on the future of attention mechanisms:

- Enhanced models for natural language processing: Attention mechanisms have proven to be highly effective in improving language models, allowing for better understanding and generation of text. In the future, we can expect even more sophisticated attention-based models that will enable machines to understand and generate human-like language with greater accuracy and contextual understanding.

- Improved image and video recognition: Attention mechanisms have already shown their power in image and video recognition tasks by enabling models to focus on relevant regions of the input. In the future, we can anticipate further advancements in attention-based models, leading to more accurate and robust object recognition, scene understanding, and video analysis capabilities.

- Attention-based reinforcement learning: Attention mechanisms have recently shown promise in the field of reinforcement learning, where agents learn to interact with an environment to maximize rewards. By incorporating attention mechanisms, models can selectively focus on important cues and leverage the temporal aspect of tasks, leading to improved performance and faster learning. In the future, attention-based reinforcement learning models are expected to enhance the capabilities of autonomous systems, enabling them to make smarter decisions in complex real-world scenarios.

- Interpretable and transparent AI systems: Attention mechanisms offer a way to inspect the decision-making process of deep learning models, making them more transparent. This is of great importance in domains where trust and interpretability are crucial, such as healthcare and finance. In the future, attention mechanisms could be used to develop more interpretable models, allowing humans to understand and validate the reasoning behind AI-driven decisions.

- Cross-modal attention for multimodal tasks: Multimodal tasks, which involve processing and understanding information from different modalities like text, images, audio, etc., have gained significant importance in recent years. Attention mechanisms have been successful in tackling these tasks, but future developments are expected to further improve the cross-modal understanding and integration of information. Enhanced attention-based models will empower machines to seamlessly process and combine multimodal data, opening doors to new applications in areas like autonomous driving, virtual reality, and augmented reality.

Discussing Ongoing Research And Emerging Trends In Attention-Based Models

The field of attention-based models is dynamic, with ongoing research pushing the boundaries of what these mechanisms can achieve. Here are some key ongoing research areas and emerging trends related to attention mechanisms:

- Sparse attention mechanisms: Full self-attention compares every pair of positions, so its compute and memory costs grow quadratically with sequence length. To address this, researchers are exploring sparse attention mechanisms in which each position attends to only a subset of the input, for example a local window plus a few global positions. Sparse attention can greatly reduce computational requirements while maintaining strong performance, making attention-based models more efficient on long inputs.

- Transformer variants: Transformers rely heavily on attention mechanisms, and much research focuses on variants that reduce the cost of attention on long sequences. Examples that push the boundaries of attention-based models include:
  - Longformer, which combines windowed attention with global attention.
  - Reformer, which uses locality-sensitive hashing attention.
  - Performer, which uses a kernel-based approximation of attention.

- Hierarchical attention: Hierarchical attention mechanisms enable models to attend to different levels of granularity within the input data, allowing them to capture information from both local and global contexts. This approach is particularly valuable in tasks with hierarchically structured data, such as document classification, where attention can be applied at the word, sentence, and paragraph levels.

- Attention with uncertainty estimation: Uncertainty estimation is crucial for building robust and trustworthy machine learning models. Researchers are exploring attention mechanisms that can explicitly model and quantify uncertainty. This enables models to not only attend to relevant parts of the input but also estimate the confidence associated with their predictions, thereby making the decision-making process more reliable and interpretable.

- Attention in generative models: Attention mechanisms are now being integrated into generative models, such as variational autoencoders (VAEs) and generative adversarial networks (GANs). By using attention, these models can focus on specific parts of the input when generating new samples, leading to more realistic and diverse outputs. Attention-based generative models have shown promising results in various domains like image synthesis, text generation, and music composition.
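One simple sparse pattern is sliding-window (local) attention, where each token attends only to neighbors within a fixed distance. Longformer combines this pattern with a few global tokens.

The sketch below builds the window as a boolean mask over a dense score matrix. That is enough to show which pairs are kept, but it assumes dense storage for clarity. An efficient implementation would compute only the band and never materialize the full n x n matrix. The helper names are hypothetical.

```python
import numpy as np

def sliding_window_mask(n, window):
    """True where |i - j| <= window: each token attends only to nearby tokens."""
    idx = np.arange(n)
    return np.abs(idx[:, None] - idx[None, :]) <= window

def local_attention(Q, K, V, window):
    mask = sliding_window_mask(Q.shape[0], window)
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    scores = np.where(mask, scores, -np.inf)   # pairs outside the window get zero weight
    scores -= scores.max(axis=-1, keepdims=True)
    w = np.exp(scores)
    w /= w.sum(axis=-1, keepdims=True)
    return w @ V, w

# How many query-key pairs are scored, compared with full attention?
n, window = 1024, 4
mask = sliding_window_mask(n, window)
print(mask.sum(), n * n)  # 9196 1048576

rng = np.random.default_rng(8)
Q = rng.normal(size=(10, 4))
_, w = local_attention(Q, Q, Q, window=2)
```

The number of scored pairs grows roughly as n * (2 * window + 1) instead of n * n, which is the source of the savings for long inputs.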

Emphasizing The Continued Impact And Potential Of Attention Mechanisms In Driving Innovation In Deep Learning Models

Attention mechanisms have revolutionized deep learning models by enabling them to focus on relevant information, improving performance in various tasks. The impact of attention mechanisms extends beyond their current applications, with vast untapped potential that will continue to drive innovation in deep learning.

Here are some reasons why attention mechanisms will play a crucial role in shaping the future of deep learning models:

- Improved performance and efficiency: Attention mechanisms have consistently demonstrated their ability to enhance model performance by selectively attending to relevant information. By focusing on important details and disregarding irrelevant or redundant data, attention-based models achieve better accuracy and efficiency, making them a cornerstone in many state-of-the-art deep learning applications.

- Enhanced interpretability: The transparency of deep learning models is a crucial factor in building trust between humans and machines. Attention weights show which parts of the input a model weighted most heavily, giving users a way to inspect and sanity-check its decision-making process, although research has shown that attention weights are not always a faithful explanation of a prediction. This added visibility can ease the adoption of deep learning in high-stakes domains such as healthcare, finance, and autonomous systems.

- Flexibility across domains: Attention mechanisms have proven effective across a wide range of domains, including natural language processing, computer vision, and reinforcement learning. Their ability to adapt to different modalities and tasks makes them a powerful tool for many deep learning problems, and this flexibility makes it likely that they will remain at the forefront of state-of-the-art models.

- Potential for further advancements: Ongoing research and emerging trends in attention-based models indicate that there is still much to explore and discover. From sparse attention mechanisms to attention in generative models, researchers are pushing the boundaries of attention’s capabilities. This continuous innovation promises even greater advancements and opens doors to new possibilities in deep learning.
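The interpretability point above can be made concrete: given a query and the keys of each input token, the softmax-normalized attention weights can be ranked to see which tokens the query weighted most. This is a minimal sketch using scaled dot-product scoring; the tokens and vectors are hypothetical toy values, not taken from a trained model, and as noted above a high weight shows where attention went, not necessarily why the model made its prediction.

```python
import math

def attention_weights(query, keys):
    """Softmax-normalized scaled dot-product weights of one query over the keys."""
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def rank_by_attention(tokens, query, keys):
    """Return (token, weight) pairs sorted from most to least attended."""
    weights = attention_weights(query, keys)
    return sorted(zip(tokens, weights), key=lambda pair: pair[1], reverse=True)

# Hypothetical toy example: which token does this query attend to most?
tokens = ["the", "cat", "sat"]
keys = [[0.1, 0.0], [2.0, 1.0], [0.5, 0.5]]
ranking = rank_by_attention(tokens, [1.0, 1.0], keys)
```

Here "cat" has the key most aligned with the query, so it ranks first.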

Looking ahead, attention mechanisms are likely to remain a driving force behind the advancement of deep learning models, bringing us closer to more intelligent and capable AI systems.

Frequently Asked Questions For Inside Transformers – A Deep Dive Into Attention Mechanisms

What Is A Transformer In Deep Learning?

A transformer is a neural network architecture built around self-attention rather than recurrence or convolution, which lets it process all positions of a sequence in parallel.

How Do Attention Mechanisms Work In Transformers?

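Each position in the input sequence is projected into a query, a key, and a value vector. The model scores each query against every key, turns those scores into weights with a softmax, and outputs a weighted sum of the values. This lets every position draw on the parts of the sequence most relevant to it and captures the relationships between different elements.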
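The steps above correspond to scaled dot-product attention, softmax(QKᵀ / √d_k)·V, as used in the original transformer. The sketch below writes it in plain Python for readability; the function names are illustrative, and practical implementations use batched tensor operations and learned projections to produce the queries, keys, and values.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of query, key, and value vectors."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        # The output is the attention-weighted sum of the value vectors.
        out = [sum(w_i * v[j] for w_i, v in zip(w, values)) for j in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights

# The query points in the same direction as the first key,
# so most of the weight (and most of the output) comes from the first value.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
out, w = scaled_dot_product_attention([[5.0, 0.0]], keys, values)
```

Dividing by √d_k keeps the dot products from growing with the vector dimension, which would otherwise push the softmax toward near one-hot weights.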

What Are The Advantages Of Using Transformers In Natural Language Processing?

Transformers excel in natural language processing tasks due to their ability to capture long-range dependencies, handle variable-length inputs, and effectively model contextual information.
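One common way transformers handle variable-length inputs is to pad the sequences in a batch to the same length and mask the padded positions so they receive zero attention weight. The sketch below assumes a simple layout (real tokens first, padding at the end) and a hypothetical `valid_len` argument; libraries express the same idea with boolean or additive masks.

```python
import math

def masked_attention_weights(query, keys, valid_len):
    """Attention weights for one query, ignoring padded key positions.

    Keys at index >= valid_len are treated as padding.
    """
    if not 1 <= valid_len <= len(keys):
        raise ValueError("valid_len must be between 1 and len(keys)")
    d_k = len(query)
    scores = []
    for i, k in enumerate(keys):
        if i < valid_len:
            scores.append(sum(q * x for q, x in zip(query, k)) / math.sqrt(d_k))
        else:
            # A score of -inf becomes exp(-inf) == 0.0, so padding gets no weight.
            scores.append(float("-inf"))
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# A 2-token sequence padded to length 3: the third key is padding.
keys = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
weights = masked_attention_weights([1.0, 1.0], keys, valid_len=2)
```

Because masking happens before the softmax, the remaining weights still sum to 1 over the real tokens only.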

Can Transformers Be Used In Computer Vision Tasks?

Yes, transformers can be adapted for computer vision tasks by splitting a 2D image into a sequence of patches or regions, which makes them suitable for tasks such as image classification and object detection.
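The patch step can be sketched as follows, in the spirit of Vision Transformer-style models: a grayscale image is cut into non-overlapping P × P patches, and each patch is flattened into a vector, giving a sequence a transformer can attend over. This is a simplified sketch; the function name is illustrative, and the learned linear projection and position embeddings that real models apply to each patch are omitted.

```python
def image_to_patches(image, patch_size):
    """Split an H x W grayscale image (a list of rows) into flattened patches.

    Patches are non-overlapping and returned in row-major order, giving a
    sequence of (H // P) * (W // P) vectors, each of length P * P.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten the patch row by row into a single vector.
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches

# A 4 x 4 image with pixel values 0..15, split into 2 x 2 patches.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = image_to_patches(image, 2)
```

The 4 × 4 image becomes a sequence of four 4-dimensional vectors, the image counterpart of a four-token sentence.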

How Have Attention Mechanisms Revolutionized Deep Learning?

Attention mechanisms have revolutionized deep learning by enabling models to process sequential data more effectively, improving performance in various tasks such as translation, text generation, and speech recognition.

Conclusion

Transformers have revolutionized natural language processing by building entirely on attention mechanisms, which are loosely inspired by human attention. This blog post has taken a deep dive into the inner workings of attention mechanisms in transformers and their significance in understanding language patterns. By breaking down the attention process and exploring concepts like self-attention and multi-head attention, we have gained insight into how transformers process and generate text.

These attention mechanisms allow transformers to focus on the most relevant information, improving contextual understanding and the quality of generated language. This is particularly valuable in tasks such as machine translation, chatbots, and text summarization. Their efficiency and effectiveness have made transformers a central tool in modern NLP, driving advancements across many industries.

With further research and development, attention mechanisms and transformers will continue to shape the future of language processing, enabling machines to better understand and communicate with humans.