Robustness techniques help make machine learning systems reliable. We will explore the various methods used to enhance the stability and consistency of these systems, guarding against performance degradation and handling unexpected inputs or noise.

By implementing these techniques, organizations can build machine learning models that consistently deliver accurate results, even in challenging and unpredictable environments. Through this comprehensive review, readers will gain insights into the importance of robustness in machine learning and learn about the approaches that can be employed to achieve and maintain reliable systems.

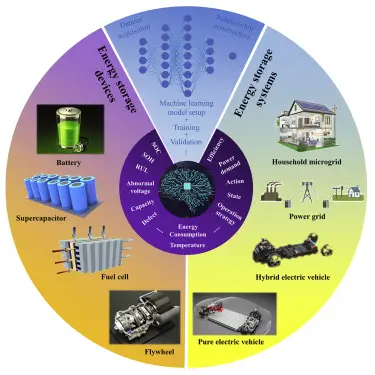

Credit: mesalabs.com

Understanding The Importance Of Robustness In Machine Learning Systems

Machine learning systems have become an integral part of various industries, from healthcare to finance, as they enable complex tasks to be automated and predictions to be made with high accuracy. However, it is crucial to recognize the significance of robustness in machine learning systems.

Robustness refers to the ability of a system to perform consistently and accurately, even in the face of unexpected or adversarial inputs. Let’s delve deeper into why robustness is critical for machine learning systems, the key challenges in ensuring robustness, and the impact of a lack of robustness on performance and accuracy.

Why Robustness Is Critical For Machine Learning Systems

Ensuring robustness in machine learning systems is vital for several reasons:

- Real-world scenarios: Machine learning systems are often deployed in real-world scenarios where they are exposed to various types of data. Robustness helps these systems to handle variations in data effectively, ensuring reliable performance.

- Adversarial attacks: In today’s digital landscape, machine learning systems can be vulnerable to adversarial attacks. An attack can involve intentionally manipulating the input data to mislead or exploit the system. Robustness plays a crucial role in detecting and mitigating such attacks, enhancing the overall security of the system.

- Generalization: Machine learning models are trained on a limited dataset, which means they need to generalize well to unseen data. Robustness assists in achieving better generalization, enabling reliable performance in different real-world scenarios.

- System reliability: Machine learning systems are often deployed in critical applications where reliability is of utmost importance. Robustness ensures that the system can handle unexpected situations, reducing the risk of failures and providing a more dependable user experience.

Key Challenges In Ensuring Robustness

Ensuring robustness in machine learning systems comes with its own set of challenges. Some of the key challenges include:

- Data quality and diversity: Obtaining high-quality and diverse data plays a significant role in building robust models. However, it can be challenging to collect sufficient and representative data, especially in domains with limited labeled examples.

- Imperfect models: Machine learning models are not perfect and can have inherent biases or limitations. These imperfections can undermine robustness, as they may not account for all possible scenarios or variations in the data.

- Adversarial attacks: As mentioned earlier, adversarial attacks pose a significant challenge to robustness. Crafting effective defenses that can detect and handle such attacks requires continuous research and innovation.

- Model interpretability: In order to ensure robustness, it is essential to understand how a model makes decisions. Lack of interpretability can hinder the ability to identify and address potential vulnerabilities or biases in the system.

Impact Of Lack Of Robustness On Performance And Accuracy

The absence of robustness in machine learning systems can have severe consequences on their performance and accuracy. Here are some of the potential impacts:

- Degraded performance: A lack of robustness can lead to degraded performance when the system encounters unexpected or outlier data. It may result in incorrect predictions or unreliable outcomes, impeding the system’s overall effectiveness.

- Vulnerability to attacks: Without robustness, machine learning systems may be more prone to adversarial attacks. These attacks can manipulate the system’s predictions, leading to incorrect decisions or unauthorized access to sensitive information.

- Bias amplification: Lack of robustness can exacerbate biases in machine learning systems. If the models are not robust to variations in the data, biases present in the training data can be amplified in the predictions, perpetuating unfair treatment or discriminatory outcomes.

- Loss of trust and credibility: Unreliable predictions or failures due to lack of robustness can erode trust in machine learning systems. Users may question the credibility of the system, leading to a reluctance to adopt or rely on its capabilities.

Understanding the importance of robustness and actively addressing the challenges associated with it are essential for building reliable and trustworthy machine learning systems. By prioritizing robustness, organizations can ensure the effectiveness and integrity of their machine learning applications in various real-world scenarios.

Techniques To Improve Robustness In Machine Learning Systems

Robustness Techniques For Reliable Machine Learning Systems

Machine learning systems have become an essential part of various industries, aiding in decision-making processes and providing valuable insights. However, these systems can sometimes be vulnerable to errors and inaccuracies, which can have significant consequences. To enhance the reliability of machine learning systems, various robustness techniques can be implemented.

In this section, we will explore some techniques that can improve the robustness of machine learning systems.

Data Preprocessing And Cleaning Techniques

- Handling missing data: Missing data can have a detrimental effect on the performance of machine learning models. Techniques such as mean imputation or interpolation can be used to fill in missing values.

- Removing outliers: Outliers are data points that deviate significantly from the rest of the data. These can skew the results of machine learning algorithms. Outliers can be identified using statistical methods like z-scores or the interquartile range, and then either filtered out or transformed.

- Dealing with imbalanced datasets: Imbalanced datasets occur when the number of instances in different classes is significantly different. This can lead to biased models. Techniques like oversampling and undersampling can be employed to balance the dataset.
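The three preprocessing steps above can be sketched with the Python standard library alone. This is a minimal illustration, not a production pipeline: the function names `impute_mean`, `iqr_filter`, and `random_oversample` are hypothetical, missing values are assumed to be encoded as `None`, and the IQR multiplier of 1.5 is a common convention rather than something the techniques require.

```python
import random
import statistics


def impute_mean(values):
    """Replace None entries with the mean of the observed entries."""
    observed = [v for v in values if v is not None]
    mean = statistics.fmean(observed)
    return [mean if v is None else v for v in values]


def iqr_filter(values, k=1.5):
    """Keep only values inside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]


def random_oversample(rows, labels, seed=0):
    """Duplicate randomly chosen minority-class rows until all classes match the largest one."""
    rng = random.Random(seed)
    by_class = {}
    for row, label in zip(rows, labels):
        by_class.setdefault(label, []).append(row)
    target = max(len(group) for group in by_class.values())
    out_rows, out_labels = [], []
    for label, group in by_class.items():
        extra = [rng.choice(group) for _ in range(target - len(group))]
        for row in group + extra:
            out_rows.append(row)
            out_labels.append(label)
    return out_rows, out_labels
```

Oversampling should normally be applied to the training split only, so that duplicated rows do not leak into the data used for evaluation.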

Feature Engineering And Selection Methods

- Identifying relevant features: Feature selection helps in selecting the most informative and relevant features for the model. This reduces the dimensionality of the dataset and can improve the performance of the machine learning system.

- Feature scaling and normalization: Scaling and normalizing features ensure that all features have a similar scale, preventing some features from dominating the others. Common techniques include standardization and min-max scaling.

- Dimensionality reduction techniques: High-dimensional datasets can be challenging to process and can lead to overfitting. Techniques like principal component analysis (PCA) and linear discriminant analysis (LDA) can reduce the dimensionality and focus on the most important features.
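As a small illustration of the scaling step, the sketch below implements standardization and min-max scaling for a single feature column. The function names are hypothetical, and the handling of constant columns (returning zeros) is an assumption made here to avoid division by zero.

```python
import statistics


def standardize(column):
    """Rescale a column to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(column)
    std = statistics.pstdev(column)
    if std == 0:
        return [0.0 for _ in column]  # constant column: nothing to scale
    return [(x - mean) / std for x in column]


def min_max_scale(column):
    """Rescale a column linearly to the range [0, 1]."""
    low, high = min(column), max(column)
    if high == low:
        return [0.0 for _ in column]  # constant column: nothing to scale
    return [(x - low) / (high - low) for x in column]
```

In practice the mean, standard deviation, minimum, and maximum are computed on the training data and then reused unchanged for validation and test data.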

Regularization And Cross-Validation Techniques

- L1 and L2 regularization: Regularization techniques, such as L1 and L2 regularization, add a penalty for large parameter values to the training loss (the sum of absolute values for L1, the sum of squares for L2), which discourages overfitting and improves model generalization.

- K-fold cross-validation: Cross-validation is a technique used to estimate the performance of a machine learning model. K-fold cross-validation divides the data into k roughly equal folds and trains the model k times, each time holding out a different fold for validation and training on the remaining k-1 folds. The k validation scores are then averaged.

- Dropout regularization: Dropout regularization is a technique that randomly drops some neurons during training, preventing over-reliance on specific features and promoting more robust models.
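To make the penalty terms and the fold rotation concrete, here is a minimal sketch. The names `l1_penalty`, `l2_penalty`, and `k_fold_indices` are hypothetical, `lam` stands for the regularization strength, and the shuffling seed is an arbitrary choice for reproducibility.

```python
import random


def l1_penalty(weights, lam):
    """L1 penalty: lam times the sum of absolute weight values."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 penalty: lam times the sum of squared weight values."""
    return lam * sum(w * w for w in weights)


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, validation_indices) pairs, one per fold."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k roughly equal folds
    for i in range(k):
        validation = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation
```

A typical loop would add one of the penalties to the training loss, fit the model on each `train` split, score it on the matching `validation` split, and average the k scores.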

Ensemble Learning And Model Averaging Techniques

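- Bagging and random forests: Bagging (bootstrap aggregating) combines multiple models by training each one on a different bootstrap sample of the dataset, that is, a sample drawn with replacement, and then averaging or voting over their predictions. Random forests, a popular bagging technique, use decision trees as base learners and also randomize the features considered at each split.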

- Boosting algorithms: Boosting combines weak models into a strong model by training them sequentially, with each new model focusing on the errors made by the previous ones. AdaBoost and gradient boosting are common boosting algorithms.

- Stacking models: Stacking involves training multiple models and combining their predictions through a meta-model. This allows the system to benefit from the strengths of each base model and can lead to improved performance.
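The bagging idea can be sketched in a few lines. The example below assumes a one-dimensional feature and binary labels 0 and 1, and it uses a deliberately simple, hypothetical base learner (`fit_stump`, a threshold at the midpoint of the two class means) in place of a real decision tree.

```python
import random
import statistics
from collections import Counter


def fit_stump(xs, ys):
    """Hypothetical weak learner: threshold halfway between the two class means."""
    pos = [x for x, y in zip(xs, ys) if y == 1]
    neg = [x for x, y in zip(xs, ys) if y == 0]
    if not pos or not neg:
        constant = 1 if pos else 0  # bootstrap sample contained a single class
        return lambda x: constant
    mean_pos, mean_neg = statistics.fmean(pos), statistics.fmean(neg)
    threshold = (mean_pos + mean_neg) / 2
    upper_label = 1 if mean_pos >= mean_neg else 0
    return lambda x: upper_label if x >= threshold else 1 - upper_label


def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train each model on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Combine the models by majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]
```

Because each stump sees a slightly different resample, individual thresholds vary, and the vote smooths out that variance.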

By implementing these techniques, machine learning systems can become more robust and reliable. They can better handle outliers, missing data, imbalanced datasets, and high-dimensional feature spaces, resulting in more accurate and trustworthy predictions. Incorporating these techniques into the development process can significantly enhance the performance and effectiveness of machine learning systems.

Evaluating Robustness And Performance Of Machine Learning Systems

Machine learning systems have become an integral part of various domains, including finance, healthcare, and autonomous vehicles. As these systems are deployed in critical scenarios, ensuring their robustness and performance becomes crucial. In this section, we will explore different techniques for evaluating the robustness and performance of machine learning systems.

Metrics For Assessing Robustness

When evaluating the robustness of a machine learning system, several metrics come into play. Let’s take a brief look at some of the key metrics:

- Accuracy and precision: Accuracy measures the overall correctness of the model’s predictions, while precision focuses on the proportion of true positive predictions in relation to all positive predictions.

- Recall and F1-score: Recall measures the proportion of actual positive instances that the model correctly identifies, while the F1-score is the harmonic mean of precision and recall and balances the two.

- Confusion matrix analysis: The confusion matrix is a powerful tool to evaluate the performance of a model by providing a comprehensive summary of true positive, true negative, false positive, and false negative predictions.
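The metrics above all derive from the four confusion matrix counts, as the following sketch shows for binary classification. The function names are hypothetical, and returning 0.0 when a denominator is zero is a convention assumed here.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, tn, fn) for a binary classification problem."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive:
            fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 from the confusion counts."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}
```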

Evaluating Performance On Different Datasets

To have confidence in the generalization capabilities of a machine learning system, it is essential to evaluate its performance on different datasets. This involves dividing the available data into three sets:

- Training set: The training set is used to train the model and tune its parameters to achieve optimal performance.

- Validation set: The validation set helps fine-tune the hyperparameters of the model and assess its performance during the training process.

- Test set: The test set serves as an unbiased evaluation of the trained model’s performance on unseen data.
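A three-way split can be sketched as follows. The function name and the 70/15/15 default proportions are assumptions for illustration; the right proportions depend on how much data is available.

```python
import random


def train_val_test_split(items, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle once, then carve out test, validation, and training subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_test = round(n * test_frac)
    n_val = round(n * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test
```

A purely random split like this assumes the examples are independent. For time-ordered or grouped data, splitting by time or by group is usually safer.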

Cross-Domain Evaluation

Machine learning systems often face the challenge of performing effectively in diverse domains. Cross-domain evaluation involves testing the system’s performance on datasets from different domains and analyzing its ability to generalize well.

Adversarial Testing

Adversarial testing aims to assess the robustness of a machine learning system by deliberately introducing adversarial inputs. These inputs are specifically designed to exploit vulnerabilities and assess the system’s resilience against malicious attacks or unexpected scenarios.
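As a toy illustration of adversarial testing, the sketch below attacks a linear classifier with a fast-gradient-sign-style perturbation. For a linear score the gradient with respect to the input is simply the weight vector, so each feature is shifted by `epsilon` in the direction that hurts the true label. The model, the function names, and the choice of perturbation budget are all assumptions for this example.

```python
def predict(weights, bias, x):
    """Linear classifier: label 1 if the score is non-negative, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score >= 0 else 0


def sign(value):
    return (value > 0) - (value < 0)


def perturb(weights, x, label, epsilon):
    """Shift every feature by epsilon so the score moves away from `label`."""
    direction = -1 if label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


def robust_accuracy(weights, bias, xs, ys, epsilon):
    """Accuracy measured on adversarially perturbed inputs."""
    correct = 0
    for x, y in zip(xs, ys):
        x_adv = perturb(weights, x, y, epsilon)
        correct += predict(weights, bias, x_adv) == y
    return correct / len(xs)
```

Comparing `robust_accuracy` at `epsilon=0` with its value at larger budgets gives a rough picture of how quickly predictions break down as inputs are perturbed.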

Interpreting And Visualizing Robustness Results

Understanding and visualizing the robustness results provide valuable insights into the behavior of machine learning systems. Here are a couple of techniques that can help:

- ROC curves and AUC analysis: ROC curves visualize the trade-off between the true positive rate and the false positive rate as the classification threshold varies, while AUC (area under the curve) summarizes that curve in a single number.

- Heatmaps and feature importance plots: Heatmaps (for example, of a confusion matrix or of per-input attributions) and feature importance plots give a visual view of the model's behavior, highlighting which inputs or features matter most for its predictions.
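The ROC curve and AUC can be computed directly from labels and scores, as sketched below. The function names are hypothetical, labels are assumed to be 0 and 1, and the AUC is computed through its pairwise interpretation: the probability that a randomly chosen positive receives a higher score than a randomly chosen negative, with ties counted as one half.

```python
def roc_points(y_true, scores):
    """Return (false_positive_rate, true_positive_rate) pairs, one per threshold."""
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = len(y_true) - n_pos
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for t, s in zip(y_true, scores) if s >= threshold and t == 1)
        fp = sum(1 for t, s in zip(y_true, scores) if s >= threshold and t == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points


def roc_auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

The pairwise loop is quadratic in the number of examples, which is fine for a sketch but too slow for large datasets, where a sort-based computation is preferable.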

By employing these techniques, we can develop a deeper understanding of the robustness and performance of machine learning systems. This enables us to make informed decisions when deploying these systems in real-world scenarios, enhancing their reliability and trustworthiness.

Addressing Robustness Challenges In Real-World Machine Learning Applications

Robustness is crucial for ensuring reliable machine learning systems in real-world applications. Machine learning models encounter various challenges, such as noisy and unclean data, missing values and outliers, concept drift, adversarial attacks, bias, and changing environments. This section will explore some of the techniques and strategies to address these robustness challenges.

Handling Noisy And Unclean Data:

- Noisy and unclean data can have a detrimental impact on the performance of machine learning models.

- Techniques such as data cleaning, outlier removal, and noise reduction algorithms can help improve the quality of the data.

- Automated preprocessing techniques can be employed to identify and handle noise and outliers effectively.

Dealing With Missing Values And Outliers In Real-Time:

- Missing values and outliers are often encountered in real-time data streams.

- Online versions of data imputation and outlier detection, which update their statistics as each record arrives, can be used to handle missing values and outliers in real-time.

- Adaptive techniques that adjust dynamically to changing data patterns can help address these challenges effectively.
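One way to handle both problems in a single pass over a stream is to keep running statistics and use them for imputation and z-score checks. The sketch below uses Welford's online algorithm for the mean and variance. The class and function names, the `None` encoding for missing values, the z-score threshold of 3, and the warm-up length are all assumptions for illustration.

```python
import math


class RunningStats:
    """Welford's online algorithm for mean and (population) standard deviation."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    @property
    def std(self):
        return math.sqrt(self.m2 / self.count) if self.count > 1 else 0.0


def clean_stream(stream, z_threshold=3.0, warmup=5):
    """Yield (value, tag) pairs where tag is 'ok', 'imputed', or 'outlier'."""
    stats = RunningStats()
    for x in stream:
        if x is None:
            if stats.count == 0:
                continue  # nothing observed yet, so nothing to impute with
            yield stats.mean, "imputed"
            continue
        is_outlier = (stats.count >= warmup and stats.std > 0
                      and abs(x - stats.mean) / stats.std > z_threshold)
        if is_outlier:
            yield x, "outlier"  # flagged values do not update the statistics
        else:
            stats.update(x)
            yield x, "ok"
```

Excluding flagged values from the running statistics keeps a single extreme reading from distorting later checks, at the cost of reacting slowly if the data genuinely shifts.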

Strategies For Data Augmentation:

- Data augmentation techniques involve generating additional training data by applying various transformations or modifications.

- Techniques like image rotation, flipping, cropping, and translation can artificially increase the dataset size and improve model performance.

- Care should be taken to ensure that data augmentation techniques preserve the integrity and diversity of the original data.
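The image transformations mentioned above are easy to sketch when an image is represented as a list of pixel rows. That representation, the function names, and the zero fill value used for translation are assumptions made for this example; real pipelines typically use an array or image library.

```python
import random


def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def vertical_flip(image):
    """Reverse the order of the rows."""
    return [row[:] for row in image[::-1]]


def rotate_90(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def translate_right(image, shift, fill=0):
    """Shift pixels right by `shift` columns (0 <= shift <= width), padding with `fill`."""
    return [[fill] * shift + row[:len(row) - shift] for row in image]


def random_augment(image, rng):
    """Apply one randomly chosen transformation."""
    transform = rng.choice([horizontal_flip, vertical_flip, rotate_90])
    return transform(image)
```

Whether a transformation preserves the label depends on the task. Flipping a photo of a cat is harmless, while flipping a handwritten digit can turn it into a different or invalid character.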

Robustness Techniques For Streaming Data:

- Streaming data poses unique challenges due to its continuous and high-velocity nature.

- Incremental learning algorithms that update models in real time can be utilized for handling streaming data.

- Techniques such as concept drift detection, ensemble methods, and adaptive learning can help maintain accuracy and adaptability.
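Incremental learning can be illustrated with a logistic regression model that is updated one example at a time by stochastic gradient descent. The class below is a hypothetical sketch, not a library API, and the learning rate is an arbitrary choice.

```python
import math


class OnlineLogisticRegression:
    """Logistic regression updated one (x, y) pair at a time."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x):
        z = sum(w * xi for w, xi in zip(self.weights, x)) + self.bias
        if z >= 0:  # numerically stable sigmoid
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    def partial_fit(self, x, y):
        """One gradient step on the log loss for a single example."""
        error = self.predict_proba(x) - y
        self.weights = [w - self.learning_rate * error * xi
                        for w, xi in zip(self.weights, x)]
        self.bias -= self.learning_rate * error
```

Each arriving record triggers one call to `partial_fit`, so the model never needs the full history in memory.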

Adapting To Concept Drift And Changing Environments:

- Concept drift refers to the change in underlying data distributions over time.

- Continuous monitoring of data and model performance is crucial to detect concept drift.

- Techniques like ensemble learning, adaptive learning, and transfer learning can help adapt the model to changing environments effectively.

Detecting And Handling Concept Drift In Real-Time:

- Real-time concept drift detection involves monitoring data streams and identifying significant changes.

- Statistical measures, change detection algorithms, and monitoring metrics can be employed for real-time concept drift detection.

- Prompt model updating, retraining, or ensemble adaptation can help handle concept drift and maintain system robustness.
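A very simple statistical drift check compares the mean of a sliding window of recent values with the mean of a reference sample. The sketch below is a simplified, assumption-laden illustration: the class name, the window size, and the threshold of three standard errors are all hypothetical choices, and it only detects shifts in the mean of a single monitored quantity, such as a feature value or a prediction error.

```python
import math
import statistics
from collections import deque


class MeanShiftDriftDetector:
    """Flag drift when the recent window mean departs from the reference mean."""

    def __init__(self, reference, window=20, threshold=3.0):
        self.ref_mean = statistics.fmean(reference)
        self.ref_std = statistics.pstdev(reference)
        self.recent = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value):
        """Add one observation and return True if drift is suspected."""
        self.recent.append(value)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough recent data yet
        recent_mean = statistics.fmean(self.recent)
        standard_error = self.ref_std / math.sqrt(len(self.recent))
        if standard_error == 0:
            return recent_mean != self.ref_mean
        return abs(recent_mean - self.ref_mean) / standard_error > self.threshold
```

When `update` returns `True`, the system could trigger one of the responses listed above, such as retraining or adjusting ensemble weights.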

Continual Learning And Adaptive Algorithms:

- Continual learning algorithms enable models to adapt and learn from new and incoming data.

- Incremental learning techniques, memory-based approaches, and knowledge consolidation methods can facilitate continual learning.

- Adaptive algorithms aim to dynamically adjust model parameters to changing conditions and maintain system robustness.

Robustness In Dynamic And Evolving Systems:

- Dynamic and evolving systems require models that can adapt to changing scenarios.

- Techniques like online learning, concept-based adaptation, and ensemble methods can ensure robustness in dynamic environments.

- Regular reevaluation of model performance and continuous updates are essential to meet evolving system requirements.

Mitigating Adversarial Attacks And Maintaining Fairness:

- Adversarial attacks aim to manipulate machine learning models and deceive their predictions.

- Techniques such as adversarial training, input sanitization, and anomaly detection can help mitigate adversarial attacks.

- Fairness in machine learning involves addressing biases and ensuring ethical decision-making without discrimination.

Defense Against Adversarial Attacks:

- Robust machine learning systems employ techniques like robust optimization, model diversification, and detection of adversarial examples.

- Adversarial examples are carefully crafted inputs that exploit vulnerabilities in machine learning models.

- Defense mechanisms like input transformation, ensemble diversity, and model interpretability can strengthen system resilience against adversarial attacks.

Fairness And Bias In Machine Learning:

- Bias in machine learning can result in unfair or discriminatory outcomes.

- Techniques such as fairness-aware learning, bias detection, and pre-processing methods can help address bias in training data.

- Establishing comprehensive evaluation metrics and guidelines can ensure fairness across different demographic groups.

Robustness Techniques For Biased Datasets And Models:

- Biased datasets can lead to biased models with unfair predictions.

- Techniques like dataset augmentation, data preprocessing, and sampling methods can be employed to mitigate biases.

- Regular reevaluation of the model’s performance on different demographic groups is essential to ensure fairness.
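Evaluating a model separately for each demographic group can be sketched as follows. The function names are hypothetical, predictions are assumed to be binary with 1 as the favorable outcome, and the gap in positive prediction rates (often called the demographic parity difference) is only one of several possible fairness measures.

```python
from collections import defaultdict


def group_report(y_true, y_pred, groups):
    """Return accuracy and positive prediction rate for each group."""
    totals = defaultdict(lambda: {"n": 0, "correct": 0, "positive": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        stats = totals[group]
        stats["n"] += 1
        stats["correct"] += int(truth == pred)
        stats["positive"] += int(pred == 1)
    return {
        group: {
            "accuracy": stats["correct"] / stats["n"],
            "positive_rate": stats["positive"] / stats["n"],
        }
        for group, stats in totals.items()
    }


def demographic_parity_gap(report):
    """Largest difference in positive prediction rate between any two groups."""
    rates = [entry["positive_rate"] for entry in report.values()]
    return max(rates) - min(rates)
```

A large gap or a large accuracy difference between groups is a signal to investigate, not proof of unfairness on its own, since the appropriate measure depends on the application.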

Deploying Robust Machine Learning Systems:

- Robust machine learning systems require careful deployment and maintenance strategies.

- Monitoring model performance, data quality, and system metrics is crucial for identifying potential issues.

- Regular model retraining and updating with new data can help maintain system accuracy and adaptability.

Monitoring And Maintenance Strategies:

- Regular monitoring of machine learning models is vital to detect performance degradation, concept drift, or potential attacks.

- Establishing an effective feedback loop based on real-time data can help maintain system robustness.

- Continuous evaluation, model versioning, and efficient model deployment pipelines ensure effective monitoring and maintenance.

Model Retraining And Updating:

- Model retraining with fresh data helps maintain model performance and adapt to changing conditions.

- Incremental learning techniques, transfer learning, and active learning can facilitate cost-effective model updates.

- Regular updates minimize performance degradation and enhance system robustness.

Ensuring Privacy And Security In Production:

- Addressing privacy and security concerns in machine learning systems is crucial for protecting user data and maintaining trust.

- Anonymization techniques, secure data storage, and access control policies can help ensure privacy.

- Regular security audits, encryption, and secure model serving infrastructure are vital for maintaining system security.

Addressing robustness challenges in real-world machine learning applications requires a multi-faceted approach. Combining techniques such as data preprocessing, adaptive algorithms, concept drift detection, fairness measures, and security practices supports the development of reliable and trustworthy machine learning systems.

Frequently Asked Questions About Robustness Techniques For Reliable Machine Learning Systems

What Are Robustness Techniques For Reliable Machine Learning Systems?

Robustness techniques are methods used to improve the resilience and accuracy of machine learning systems, ensuring they perform well even in challenging or unpredictable conditions.

How Do Robustness Techniques Enhance The Reliability Of Machine Learning Systems?

Robustness techniques, such as data augmentation and adversarial training, help machine learning systems handle variations, noise, and adversarial attacks, making them more dependable and trustworthy.

What Is Data Augmentation In The Context Of Machine Learning Robustness?

Data augmentation involves generating additional training examples by applying random transformations to existing data, enhancing the model’s ability to generalize and perform well on new, unseen instances.

How Does Adversarial Training Contribute To The Robustness Of Machine Learning Models?

Adversarial training involves exposing a model to carefully designed adversarial examples during training, enabling it to learn to recognize and handle deceptive inputs, improving its overall robustness.

Can Robustness Techniques Be Applied To Any Type Of Machine Learning System?

Yes, robustness techniques can be applied to various types of machine learning systems, including image recognition, natural language processing, and speech recognition, among others, to enhance their reliability and performance.

Conclusion

To ensure the reliability of machine learning systems, robustness techniques play a crucial role. By applying these techniques, we can make our systems more resistant to adversarial attacks, minimize the impact of data perturbations, and enhance their general performance. One such technique is adversarial training, which involves exposing the system to adversarial examples during the training phase to improve its ability to handle such attacks.

Another important technique is data augmentation, where we expand our dataset by adding variations of the existing data, helping the system to better generalize and make accurate predictions. Additionally, employing regularization methods such as dropout and weight decay can prevent overfitting, ensuring the system performs well on unseen data.

It is imperative for machine learning practitioners to carefully consider and implement these robustness techniques to enhance the reliability of their models. By doing so, we can build machine learning systems that are truly dependable and robust in real-world scenarios.